Preface

Domain-Driven Design makes available a set of principles, patterns and techniques that can help subject-matter experts, architects, developers and other team members to work together and decompose complex systems into well-factored, collaborating, loosely coupled subsystems. While Eric Evans introduced these concepts in the early 2000s, in a lot of ways, these principles were way ahead of their time. We were firmly in the age of the monolith, service oriented architectures (SOA) as a concept was just starting to take root, the cloud, microservices, continuous delivery, etc. didn’t even exist yet! While it was relatively easy to adopt some of its tactical aspects, the strategic side of domain-driven design was still seen as an unjustifiable overhead for the most part.

Fast-forward to today, we are building our most complex software solutions ever, with even more complex organization and team structures to cope. Also, the use of the public cloud is almost a given. This has given rise to a situation where distributed teams and applications are almost a norm. Also, we are also in an age where applications from an earlier generation need to be modernized. All this has resulted in the principles of DDD, specifically the strategic elements gaining a lot of prominence.

We have been practitioners of these concepts and have gained valuable insights from our experiences. Over the years, we have seen a number of advancements that have made adoption of DDD at scale, a viable option. This book is a distillation of all our collective experiences. While we have drawn a lot of inspiration from earlier works on the subject, we have been very conscious to apply a practitioner’s mindset so that we lower the barrier for teams looking to sustain and thrive in their journey of building complex, distributed software.

Foreword

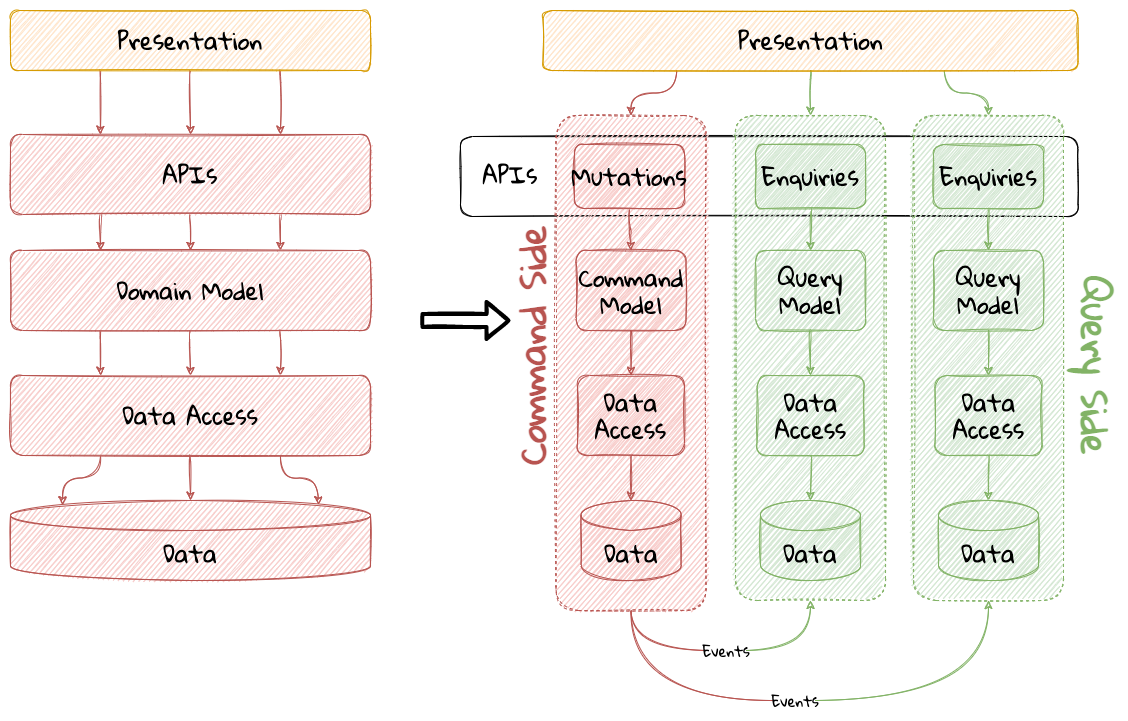

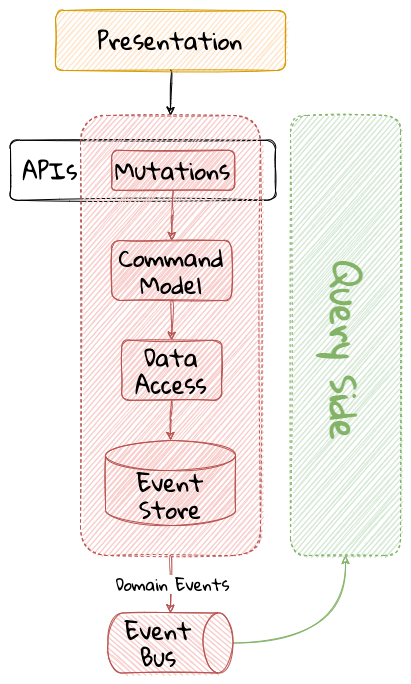

Ever since my first encounter with DDD (Domain-Driven Design) in 2008, I have been convinced that this is how to approach the design of complex systems or systems in complex environments. While DDD gives an excellent foundation, I have found that the combination with CQRS (Command-Query Responsibility Separation) can be even more powerful, albeit challenging to implement.

The library of examples that I started to publish in 2009 evolved into a framework, Axon Framework, as early adopters began sharing their experiences using it. One of those early adopters was Prem, using it in a large and complex project at a financial institution.

With the rising popularity of microservices, DDD and CQRS have shown to be essential design principles. Someone once jokingly said: “there are three types of developers implementing microservices. Those who use DDD, those who don’t realize they do, and those who fail.” With the demand for more real-time systems and the rise in popularity of event-driven systems, Event Sourcing also gained more traction. This push for event-driven systems has led to several interesting design techniques that take these events as the very starting point for exploring a system’s behavior.

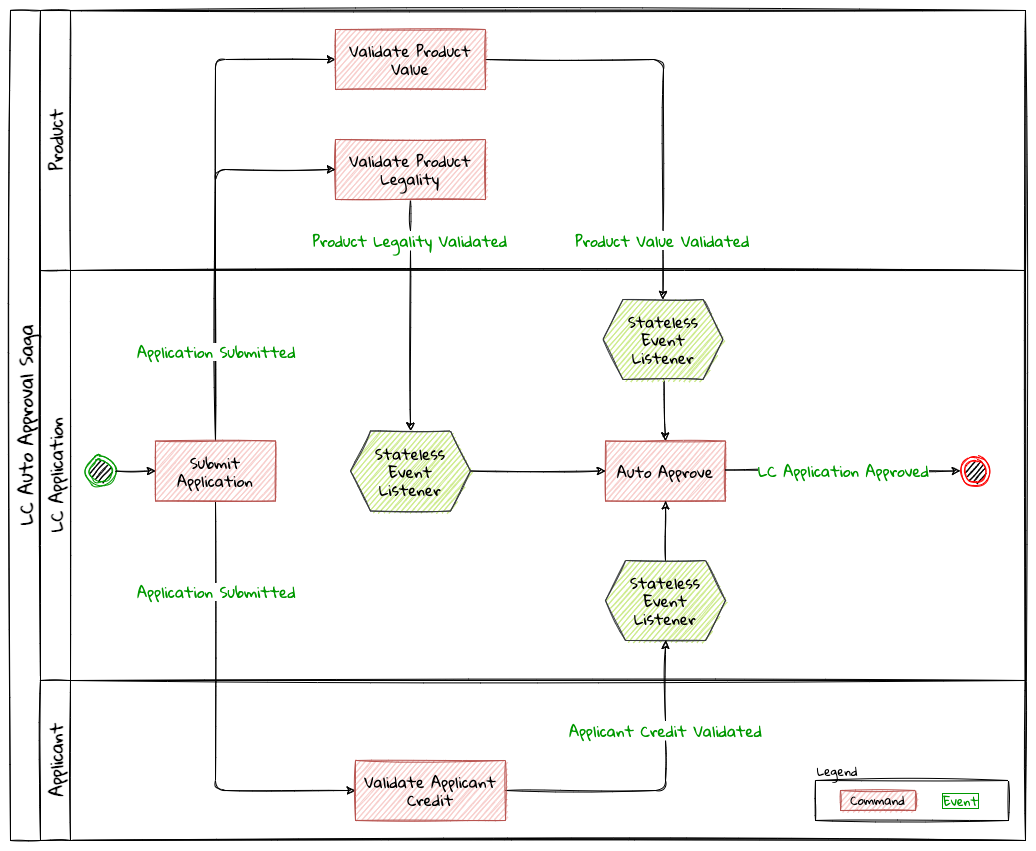

While I consider the famous “Blue Book” by Eric Evans a masterpiece that aged very well, it also stays very much in the abstract. Over the years, concepts and practices have been refined and adapted to changes in how we use technology. They are described in numerous resources scattered across the internet, making them hard to find and distill by those venturing into these realms for the first time. Prem and Karthik do an outstanding job taking you on the journey from the essential concepts of Domain-Driven Design, via the design practices, to the actual implementation of a system. Their complete and pragmatic approach makes this book an excellent starting point for anyone exploring new approaches to complex system design. It’s the book I wish I had when I started my journey…

— Allard Buijze

CTO and Founder of AxonIQ

Creator of Axon Framework

The ideas behind DDD (Domain-driven Design) have an outsized influence on software architecture and the way we think about software projects. “Ubiquitous Language” is a term defined by DDD, describing the practice of creating a more precise language when discussing design in software projects. However, DDD itself has provided a ubiquitous language for software architecture: most discussions about microservices touch on terms originating in the DDD world.

However, the book that started it all, Domain-driven Design by Eric Evans, falls into the category of what Mark Twain called a classic: “a book that everyone wants to have read.” The truth of the matter is that, while the book is packed full of influential ideas, it is quite abstract for readers looking for advice on how to build software. That doesn’t indicate a deficiency in the source –- it is designed to cover broad concepts, which encompasses many facets of design, including but not limited to software development.

However, when developers need to utilize these ideas in a practical sense, like a concrete project, the abstract doesn’t help -– they need a practitioners guide, which readers now hold in their hands. This book does an excellent job translating the concepts of DDD into useful overviews and concrete advice on how to implement the ideas. For example, a pertinent question that developers have that is unanswered in the DDD book: which is better to implement a project, imperative or functional programming style? Each has trade-offs; this book helps teams make those kinds of implementation decisions. This book covers multiple perspectives on applying DDD to Java projects, including foundational material about how DDD and agile engineering practices intersect in modern projects.

While many books exist that cover DDD and software architecture independently, this book does an excellent job mapping one to another, describing how the design influence of DDD maps to a number of different architecture topologies. For example, it describes how teams can reconcile bounded contexts with layered and other architecture styles.

Part one of this book covers many foundational details of the book, including technical and design details. Part two covers Real World DDD, covering how to apply the abstract ideas in a more concrete realm. It covers everything from how to discover and document the domain to how to map real-world concepts into DDD concepts. For example, it does an excellent job describing aggregates, their design, and relationships.

Part three covers a topic dear to my heart as the author of Building Evolutionary Architectures, the evolution of software. Software products constantly evolve, including new features and capabilities. Therefore, software development isn’t a static process–it must evolve along multiple dimensions to remain successful. This book contains a number of useful evolution patterns, covering both the domain and architecture characteristics.

Towards the ideal of creating a practitioner’s guide, the book also covers engineering practicalities such as logging, testing, versioning, and a host of other practical considerations that definitely fall outside the scope of the DDD book but teams need.

DDD offers a host of conceptual ideas but lacks implementation details. This book serves as an excellent bridge between abstract and implementation. While the focus is on Java, the content is broad enough for any practitioner to get a good overview of the many important considerations for DDD projects. In fact, each specific technology stack would benefit from having a practitioners guide such as this one.

— Neal Ford

Director / Software Architect / Meme Wrangler

Thoughtworks, Inc.

The early 2000’s were a dynamic time in the software industry. A number of luminaries were exploring lightweight “agile” processes that had the effect of converting software engineering from a paint-by-numbers activity into first class knowledge work. Agile methodologies like eXtreme Programming were natural extensions of arguments put forth by Jack Reeves in his 1992 C++ Journal article What is Software Design, which claimed that source code – not design docs or architecture diagrams – was the only equivalent to design specifications found in other engineering disciplines. The split between design and manufacturing still exists, of course, but with software, we don’t need people for manufacturing. The compiler does it for us, which means that all work the people do is design work, and agile processes pushed the new generation of software engineers into messy design conversations with “the business.”

The turn of the century also corresponded with the dotcom boom (and subsequent bust) as user interactions shifted from desktop applications to the web, creating technical scaling problems orders of magnitude beyond what the industry had faced up to that point. Distributed technologies weren’t exactly new, but the ever-increasing technical complexity needed to solve problems of scale required new architectural approaches and created a demand for software engineering talent.

It was in that environment that Eric Evans published his famous “blue book,” introducing the concept of domain-driven design (DDD) in 2003. In it, Evans gave us a set of techniques that directly mapped the design aspects of software development onto the way we wrote source code, and patterns that helped us manage technical complexity at scale.

It is precisely because DDD has aged so well that we forget how much the world has changed since it was first published. User behavior changes triggered by the introduction of the smartphones pushed organizations to externalize integration interfaces that had previously been hidden inside an internal network, previously accessed perhaps by a public website hosted in a DMZ but not by external users directly. Continuous delivery accelerated the pace of software change and, as a consequence, the pace of the design process. Microservices and modern event-driven architectures created architectural patterns that enabled more software design activities to happen in parallel as organizations looked to scale the throughput of delivery. Prem and Karthik have been on the leading edge of many of these changes, well-networked in the innovation crucible of Thoughtworks that helped name and evangelize some of these techniques, and directly applying them in large organizations eager to modernize.

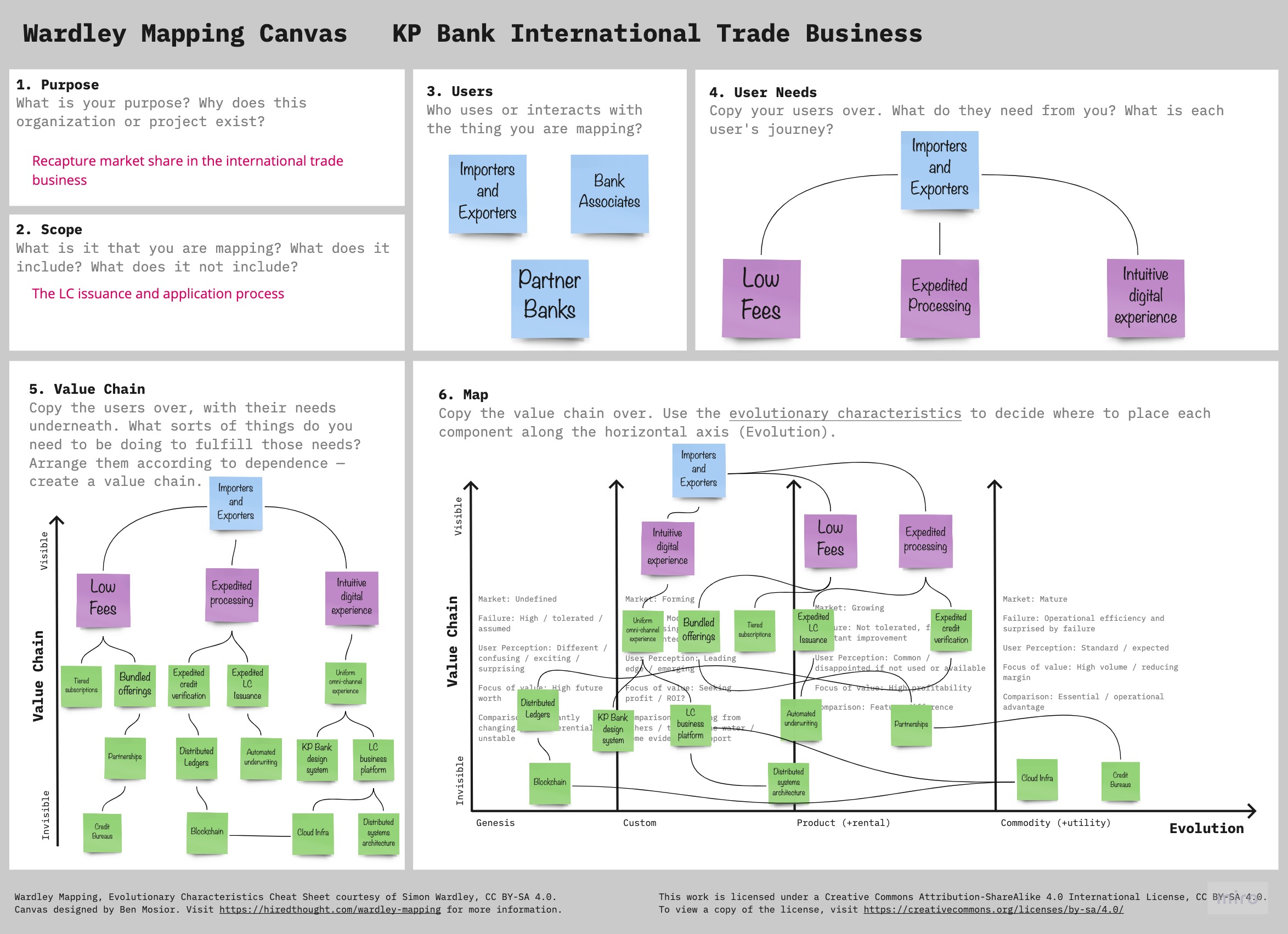

DDD remains as relevant today as it did when Evans published his blue book because it directly confronts the root causes of why software design is hard: creating a shared understanding of the problem and modularizing the architecture in a way that communicates that understanding in code. Karthik and Prem share important updates on both fronts: techniques like Wardley mapping, EventStorming, and domain storytelling to create the shared understanding, and an updated view of how DDD patterns apply with modern architectural approaches. This is a book full of lessons learned in the trenches from experienced practitioners, with practical lessons for us all. I’ve personally sharpened my own understanding while reviewing the book, and I’m confident you will too.

— Brandon Byars

Head of Technology, Thoughtworks

Creator of Mountebank

Who This Book Is For

This book is written keeping a diverse set of roles and skills in mind. While the concepts of DDD have been in existence for a long time, practical application and scaling has been a challenge, arguably due to a dearth of practical techniques, tools and real-world examples that bring all these concepts together as a cohesive whole. Successful application of these principles requires strong collaboration from a varied set of roles and disciplines from across the organization — ranging from executives, business experts, product owners, business analysts, architects, developers, testers, operators, etc.

Here is a quick summary of reader personas and what they will gain from reading this book:

-

Executives and business experts should read this book so that they can articulate their vision and the core concepts that justify the need for the solution. Techniques will allow them to do this in an expedient manner and also gain empathy towards what it takes to implement changes quickly and reliably.

-

Product owners should read this book so that they can act as an effective facilitator when communicating with both business and technical team members while making sure that there is no loss in translation.

-

Architects should read this book so that they gain an appreciation of the fact that it is of utmost importance to understand the problem before thinking of a solution. They will also gain an appreciation of various architecture patterns and how they play in conjunction with DDD principles.

-

Developers will be able to put their knowledge to work with this practical guide to create elegant software designs that are pleasant to work with and easy to work with and reason about.

The book provides a hands-on approach on how to gather requirements effectively, promote a shared understanding among all team members in order to implement solutions that will be able to withstand the test of dynamically evolving business ecosystem.

What this book covers

Chapter 1, The rationale for domain-driven design, examines how the practice of Domain-Driven Design provides a set of guidelines and techniques to improve the odds of success in our favor. While Eric Evans wrote his classic book on the subject way back in 2003, we look at how that work is extremely relevant in today’s times. We will also introduce the elements of strategic and tactical DDD.

Chapter 2, Where and how does DDD fit?, examines how DDD compares with several of these architecture styles and how/where it fits in the overall scheme of things when crafting a software solution.

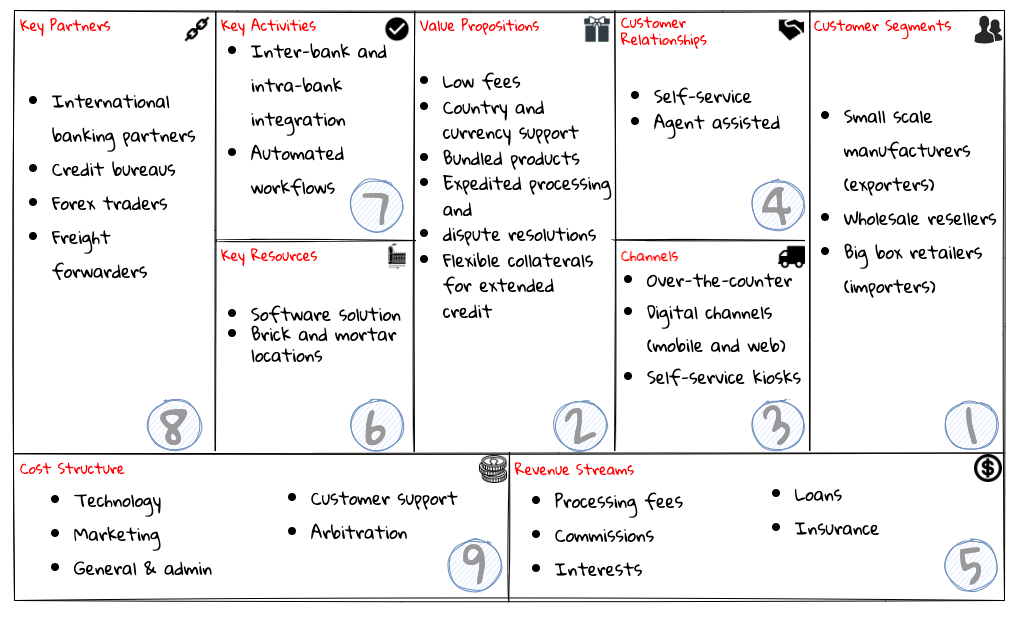

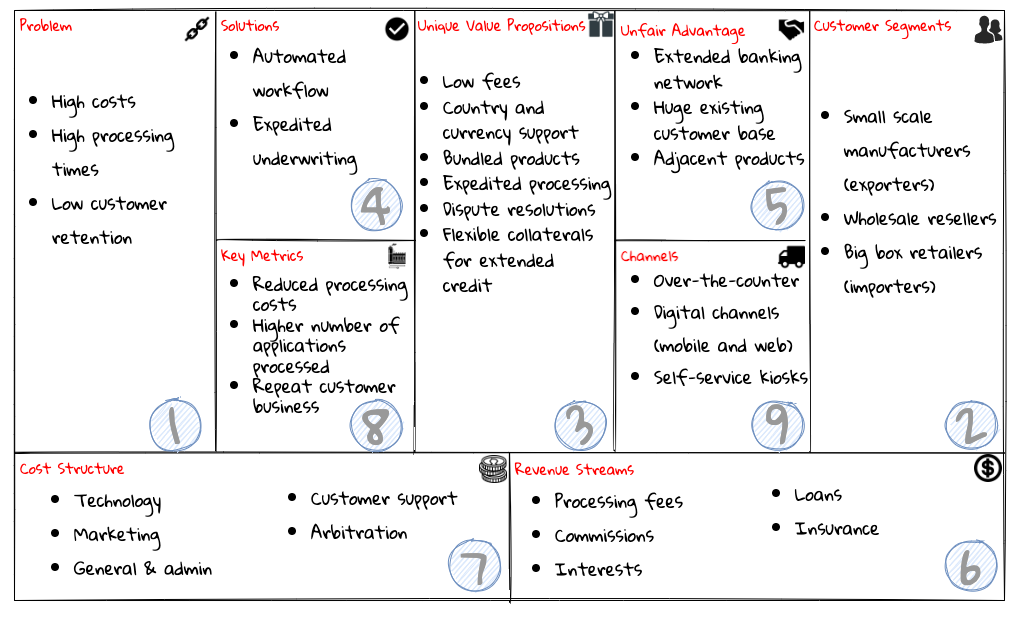

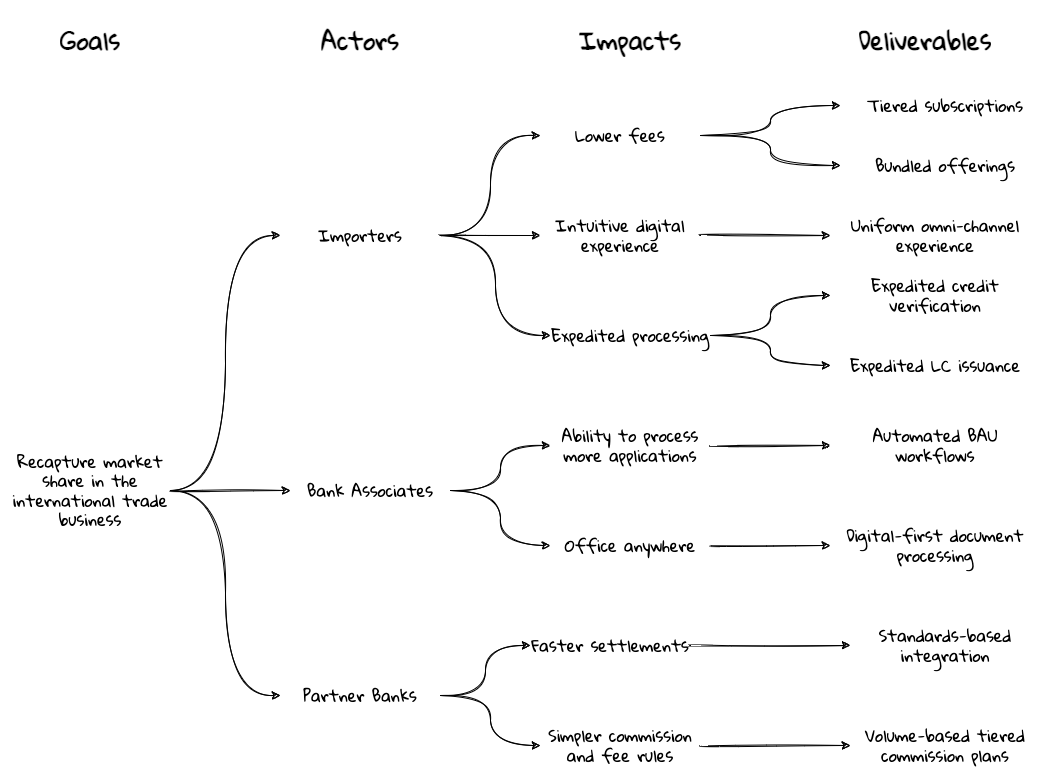

Chapter 3, Understanding the domain, introduces the sample domain (International Trade) at a fictitious KP bank. We also examine how we can get started with strategic design using techniques like business model canvas, impact maps, and Wardley maps.

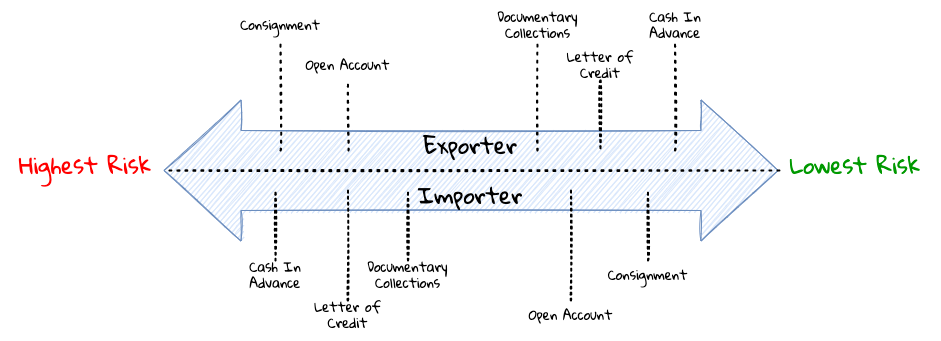

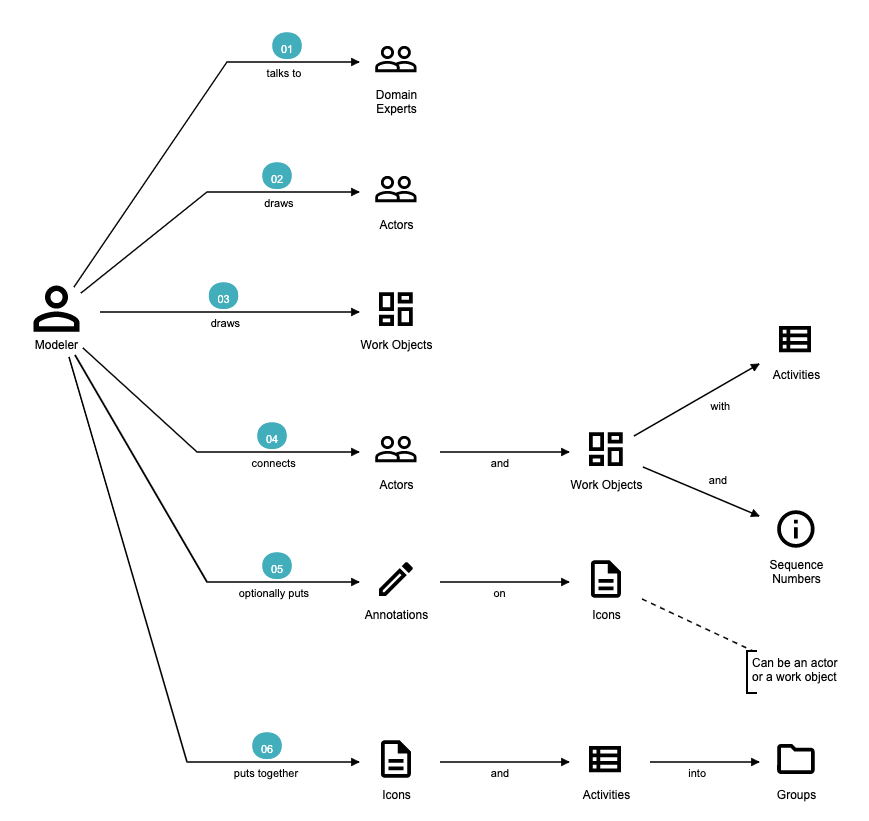

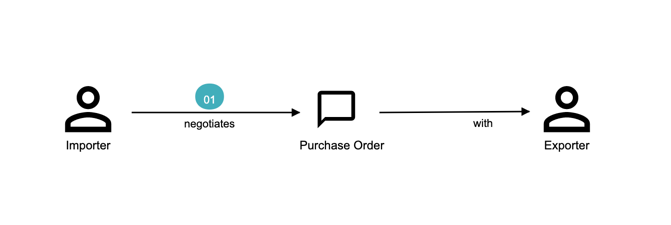

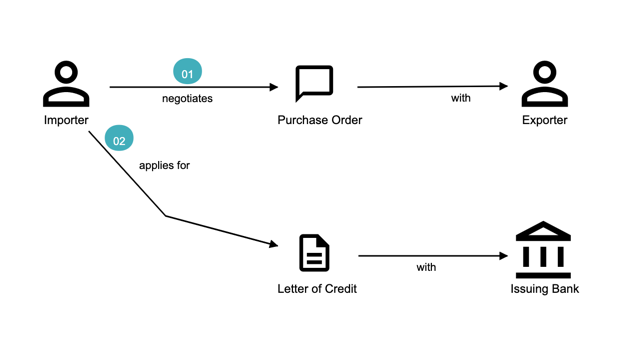

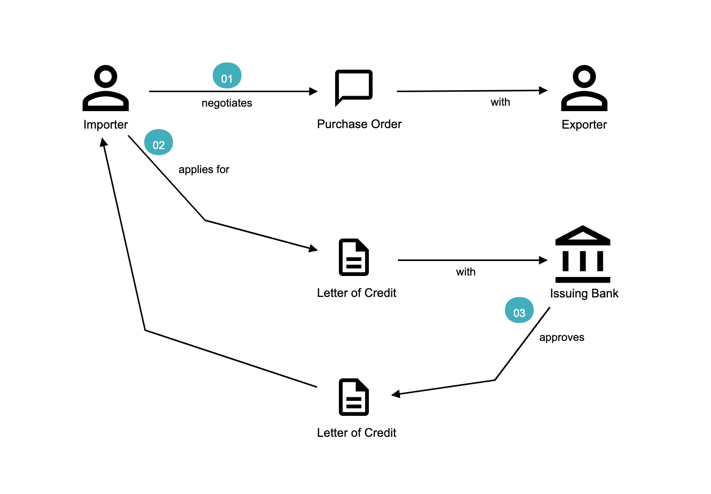

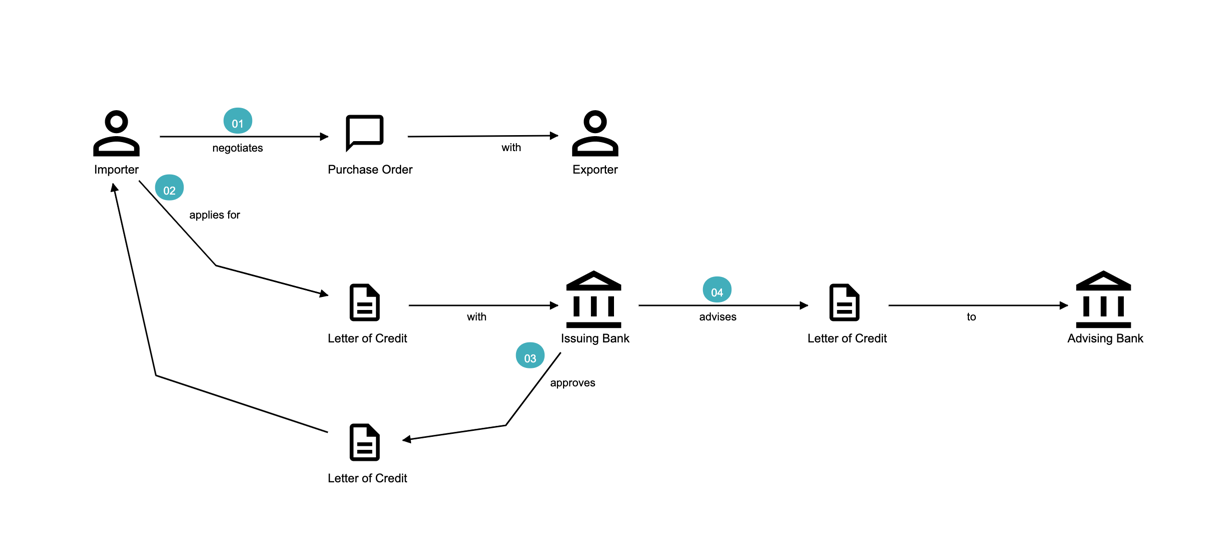

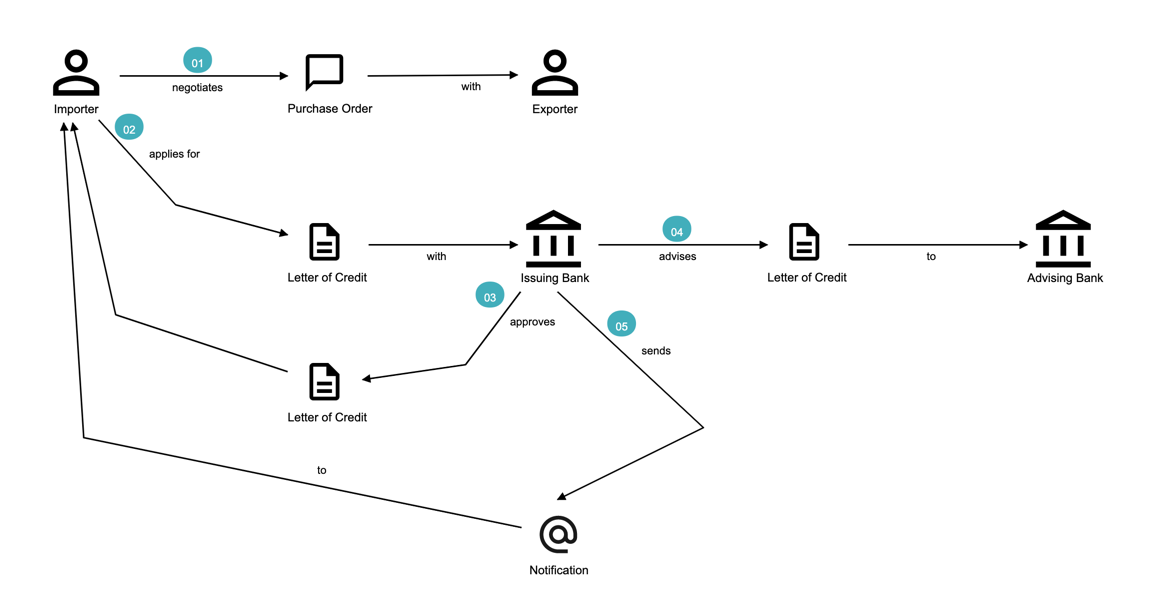

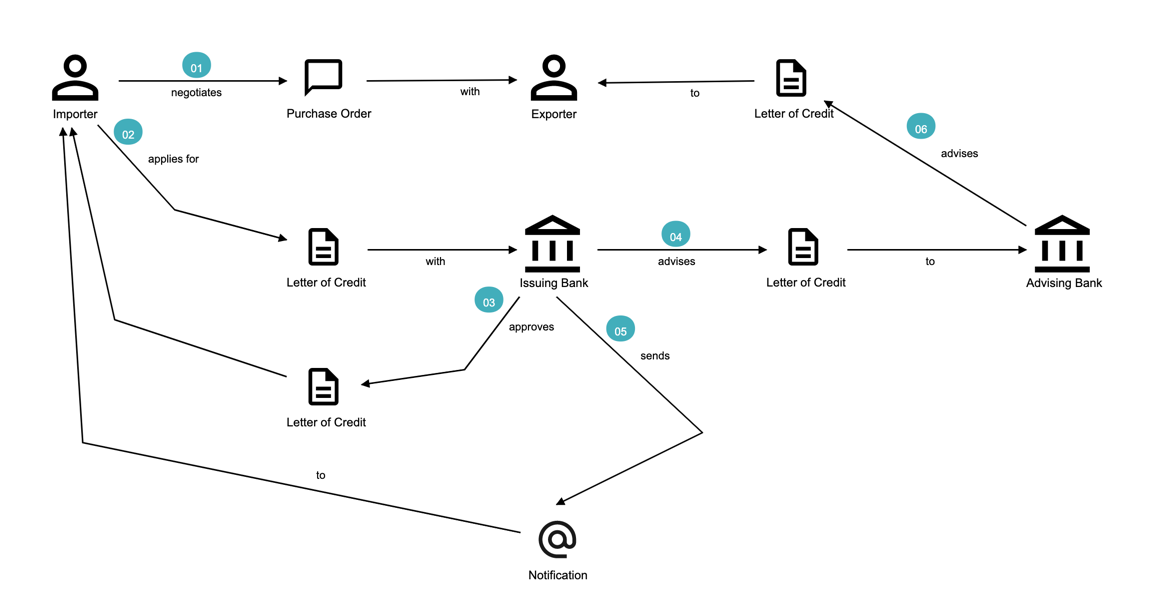

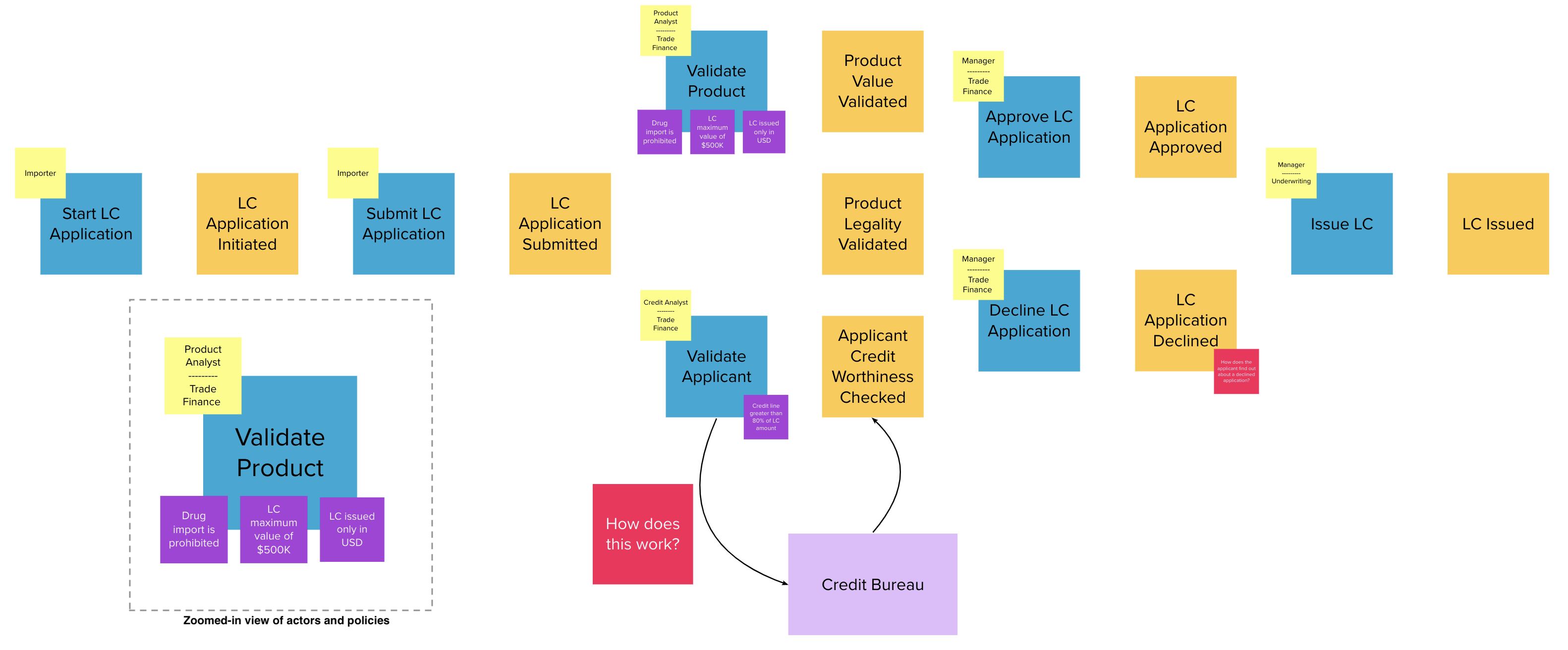

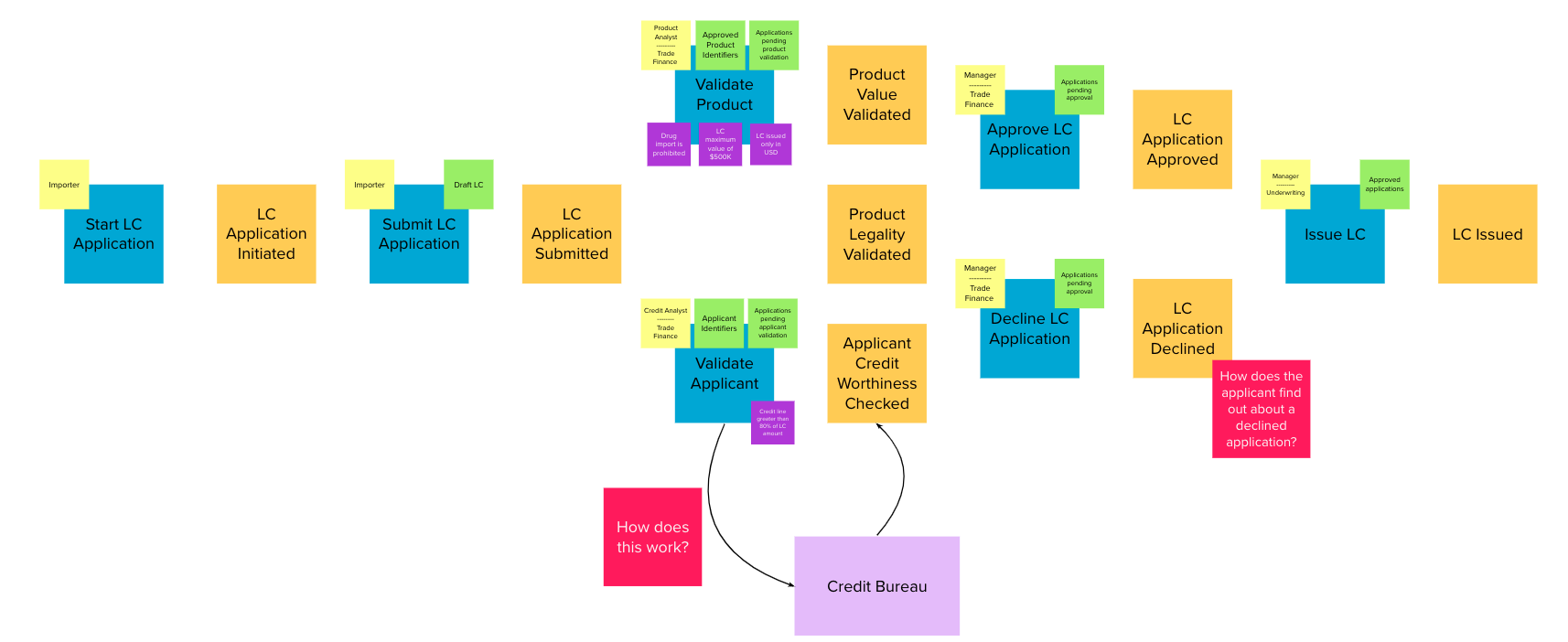

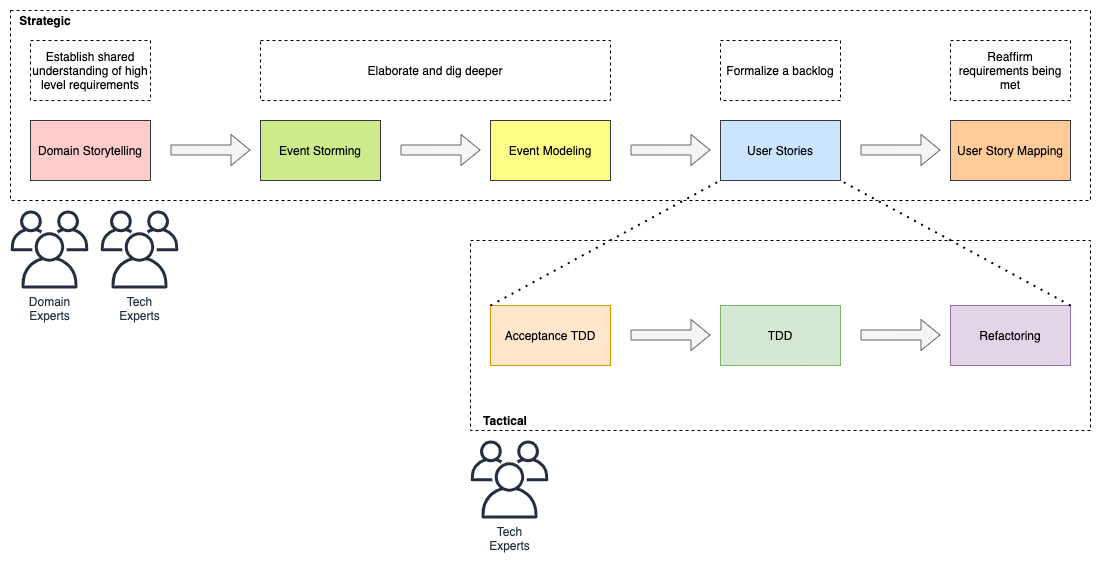

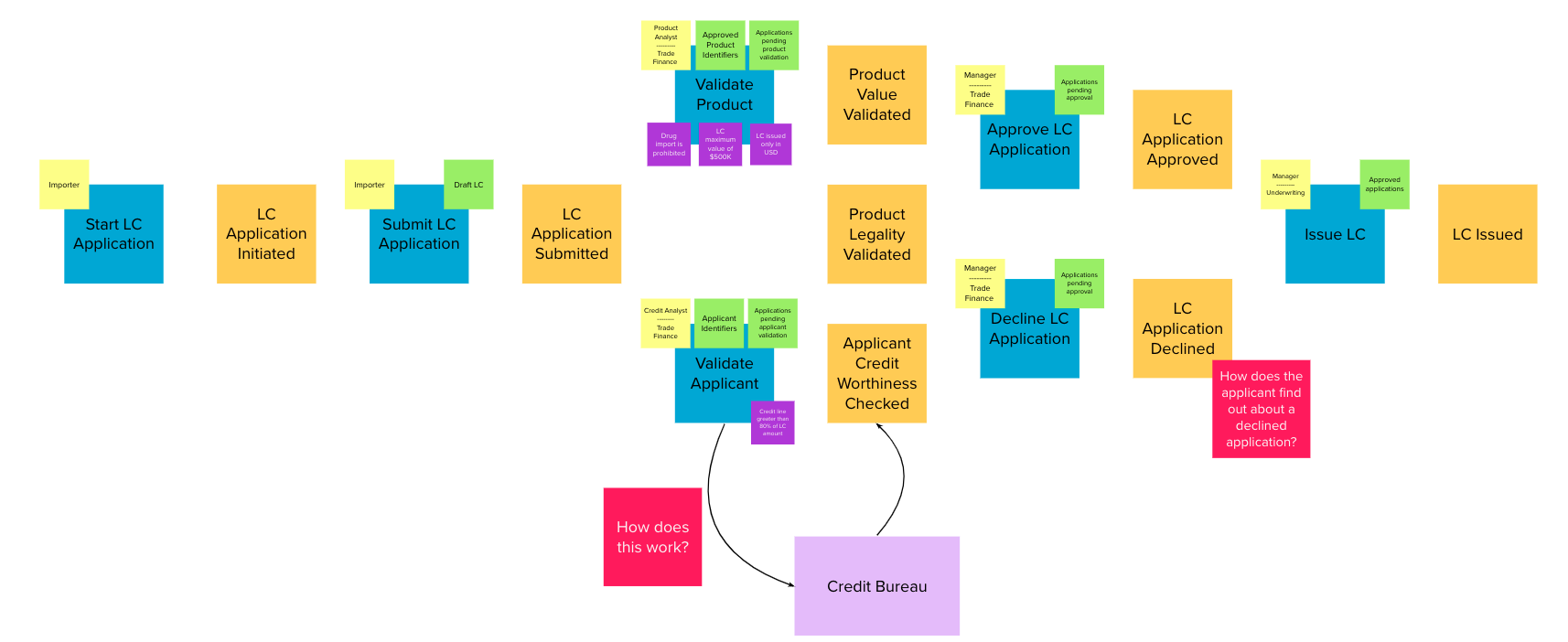

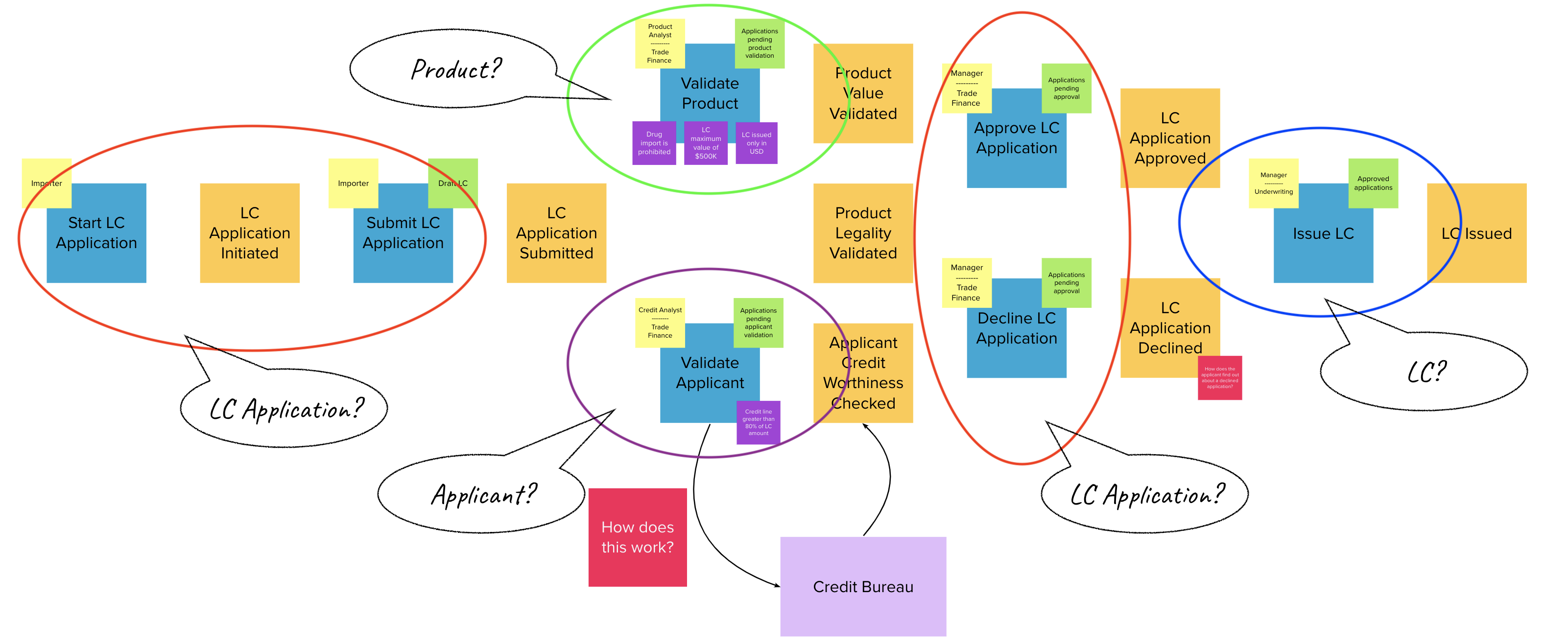

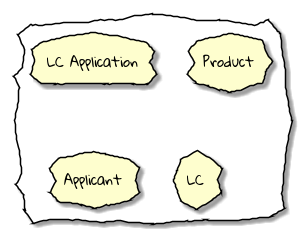

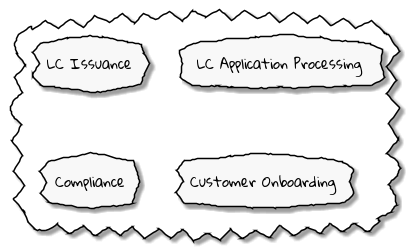

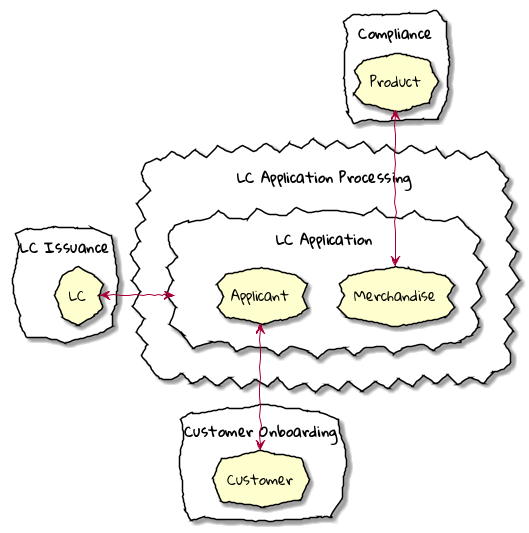

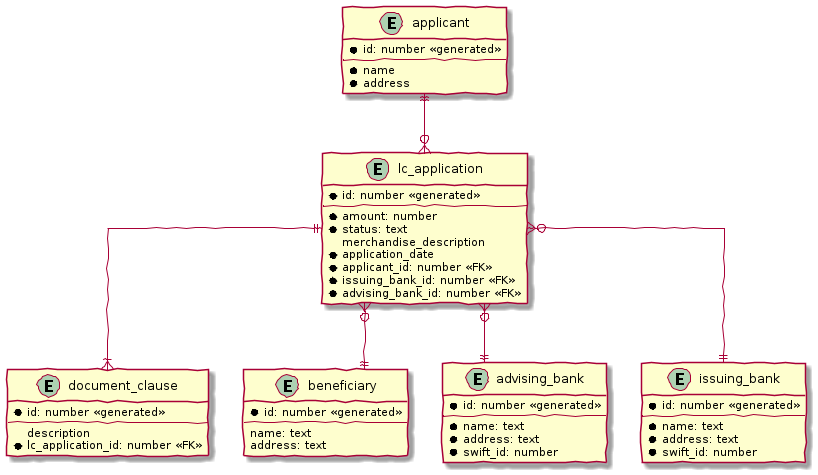

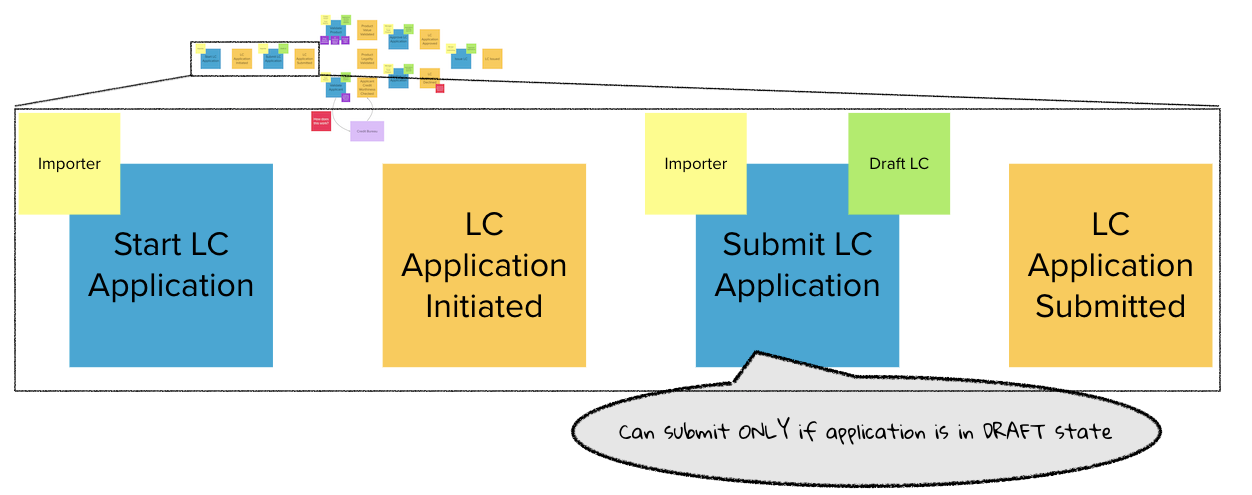

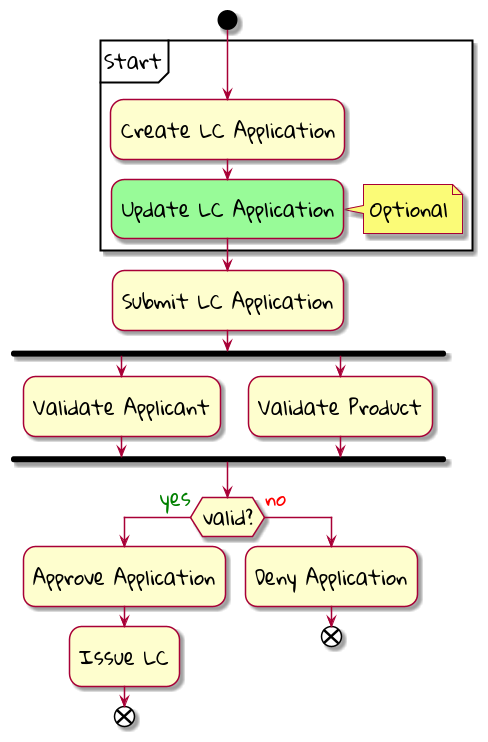

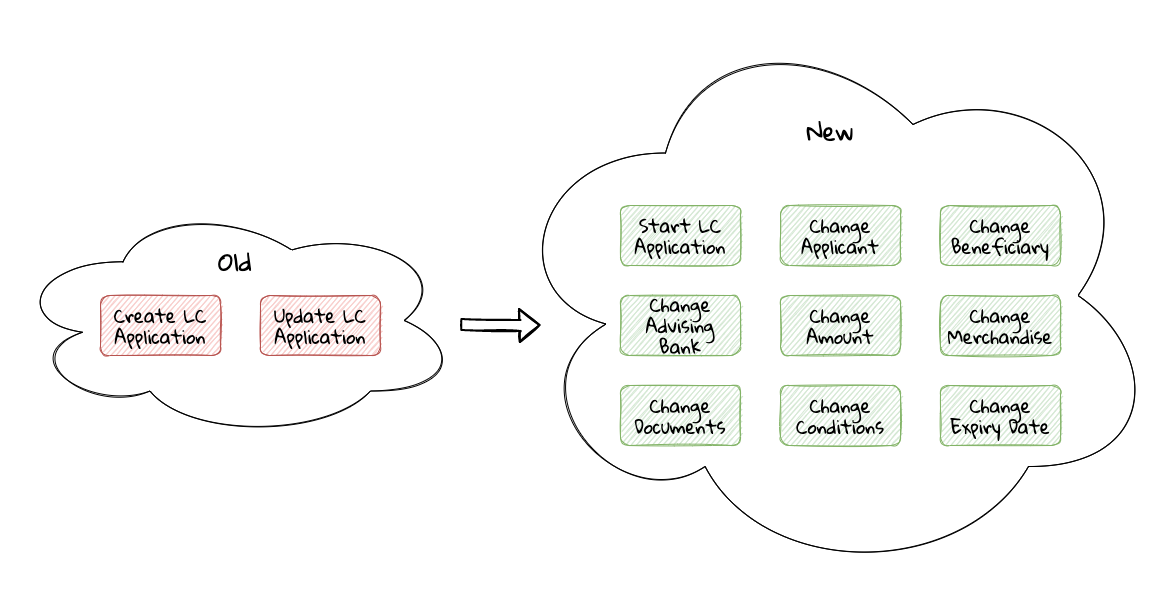

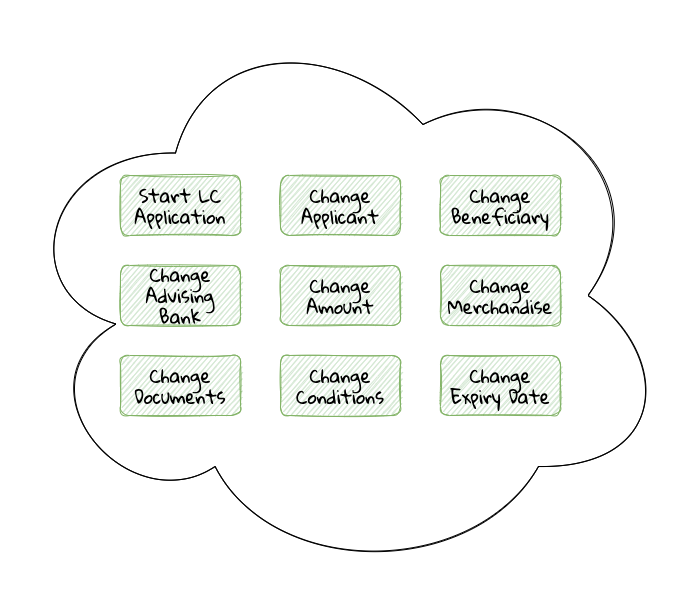

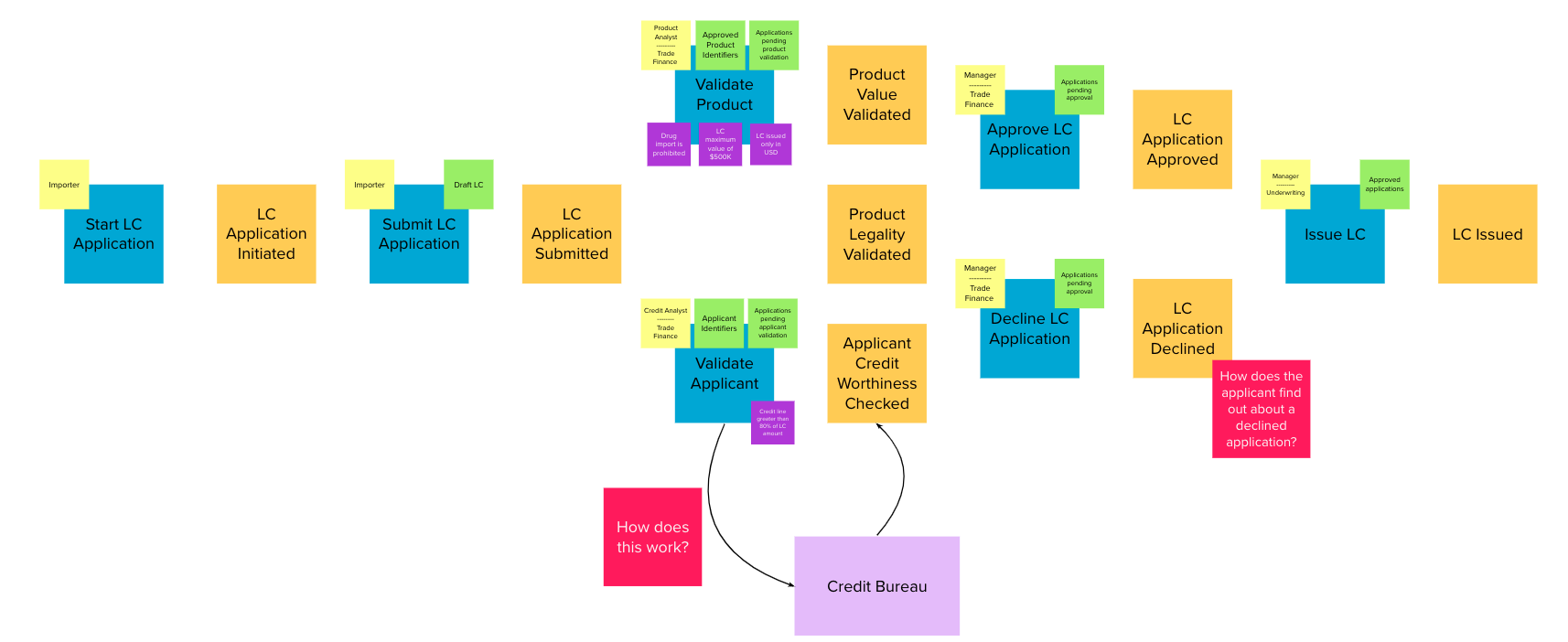

Chapter 4, Domain analysis and modeling, continues the analysis and modeling of the sample problem domain — Letter of Credit(LC) by using techniques like domain storytelling and eventstorming to arrive at a shared understanding of the problem and brainstorm ideas to arrive at a solution.

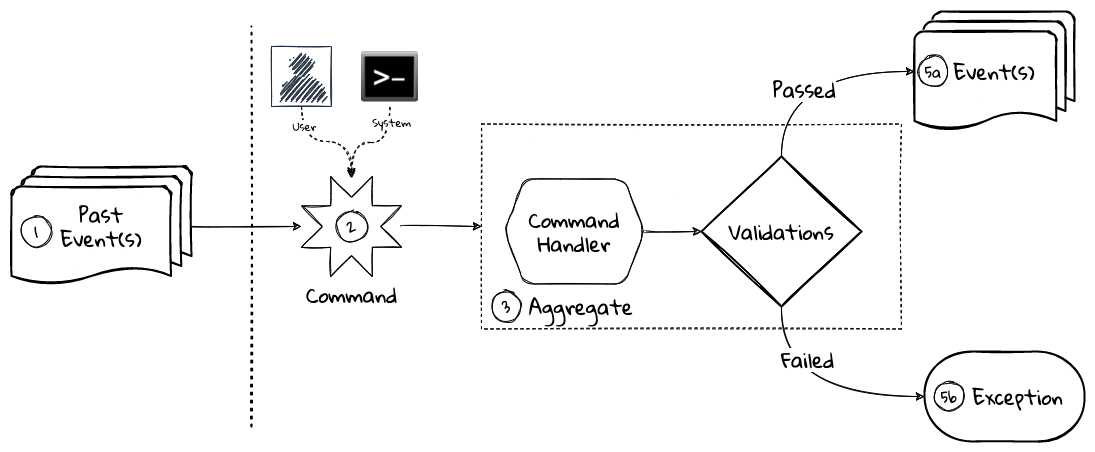

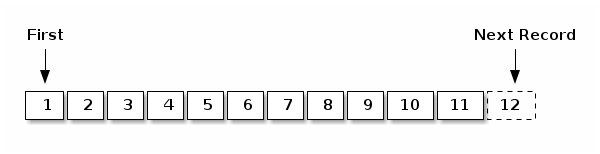

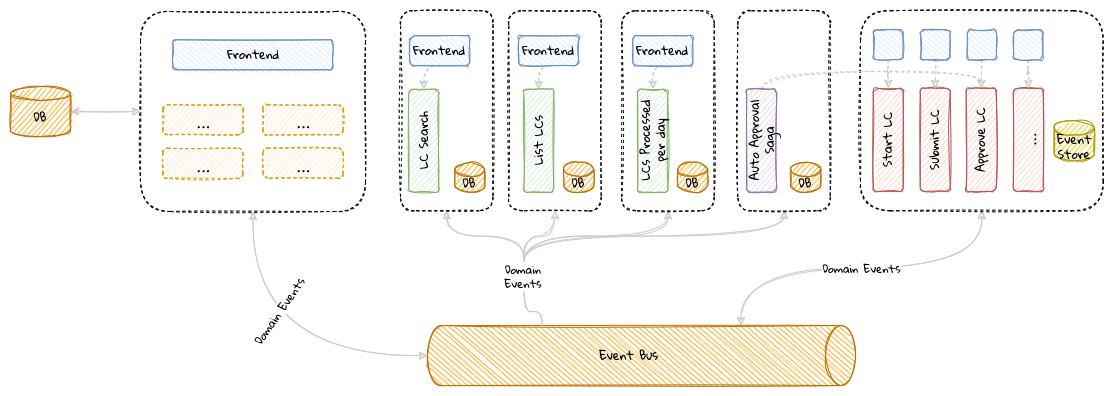

Chapter 5, Implementing domain logic, implements the command side API for the sample application. We look at how we can employ an event-driven architecture to build loosely coupled components. We will also look at how to implement structural and business validations and persistence options by contrasting between state-stored vs event-sourced aggregates.

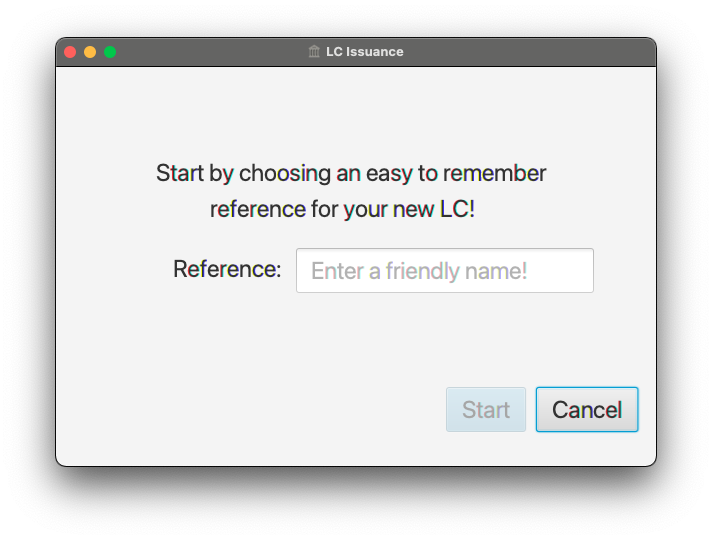

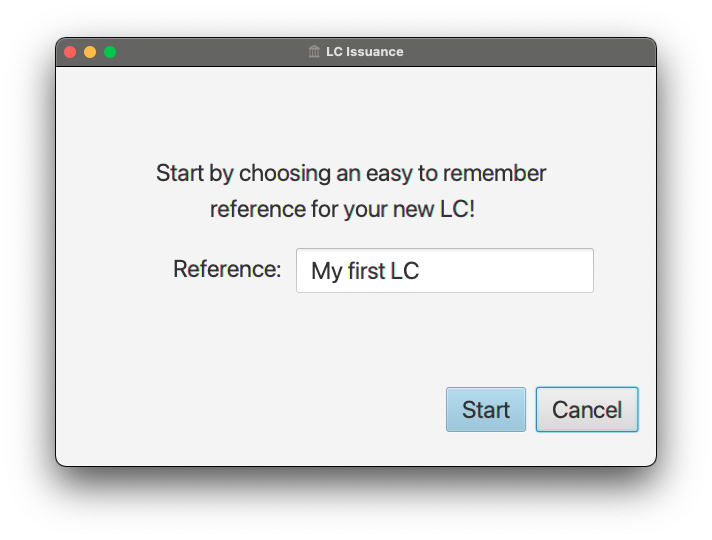

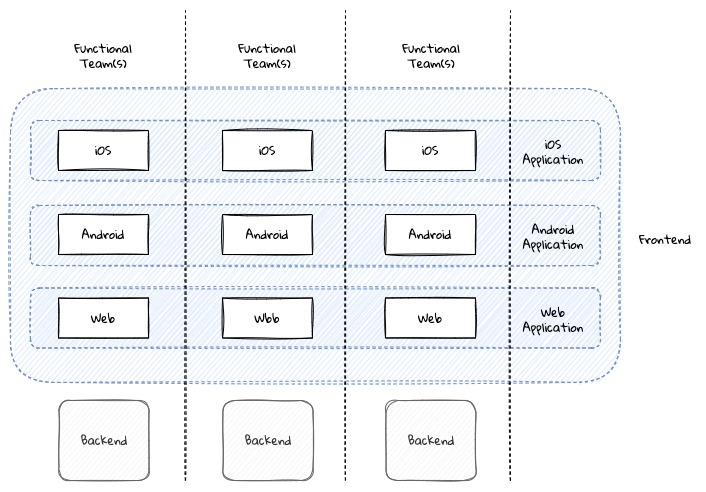

Chapter 6, Implementing the user interface – task-based, designs the UI for the sample application. We will also express expectations of the user interface to the service implementation.

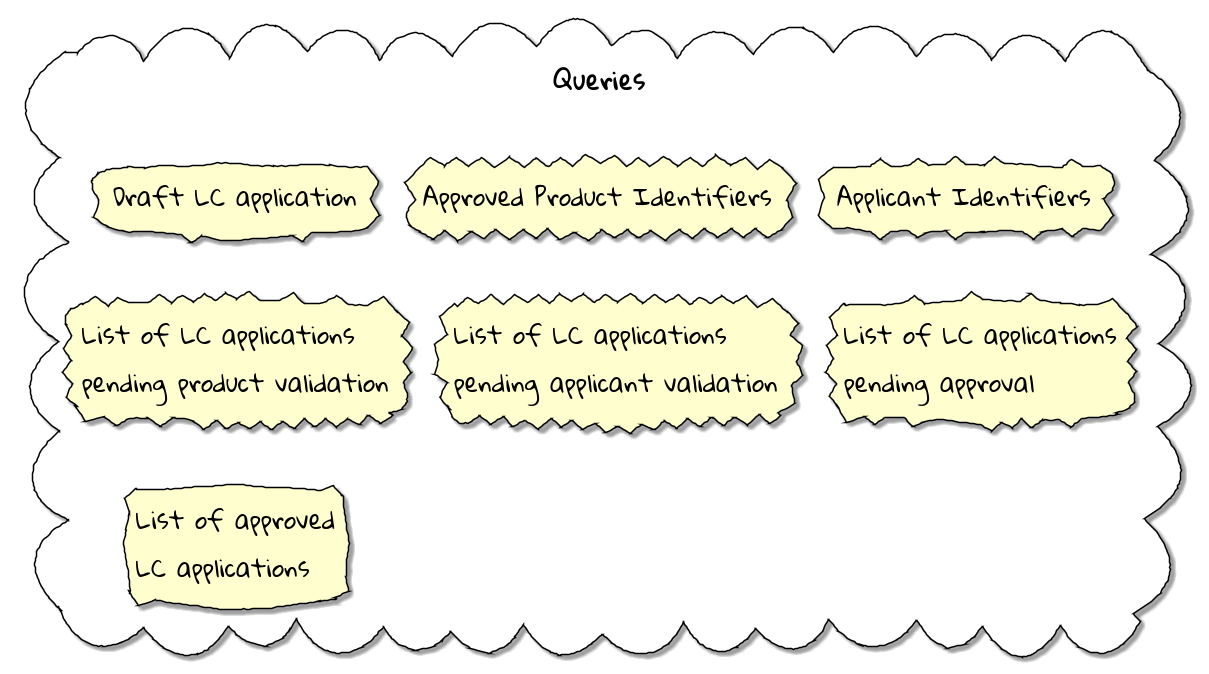

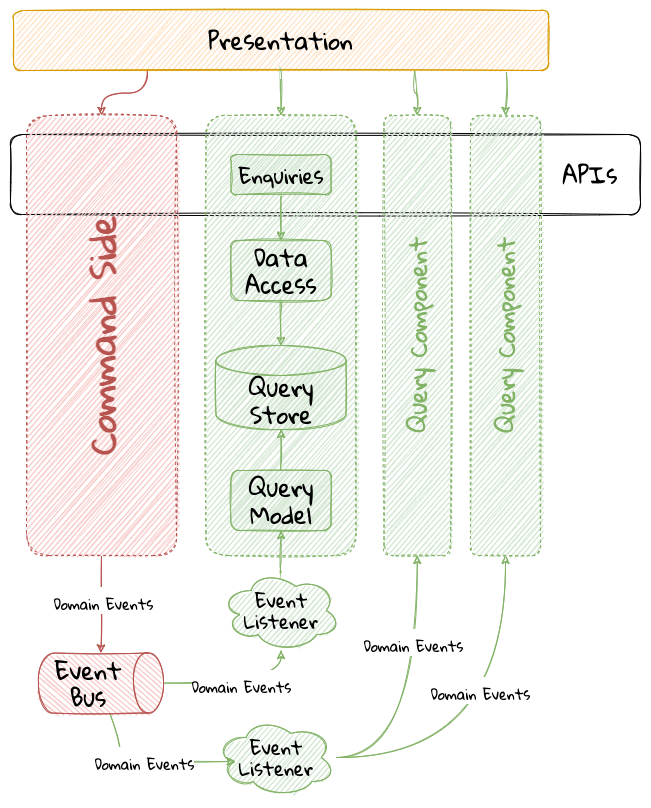

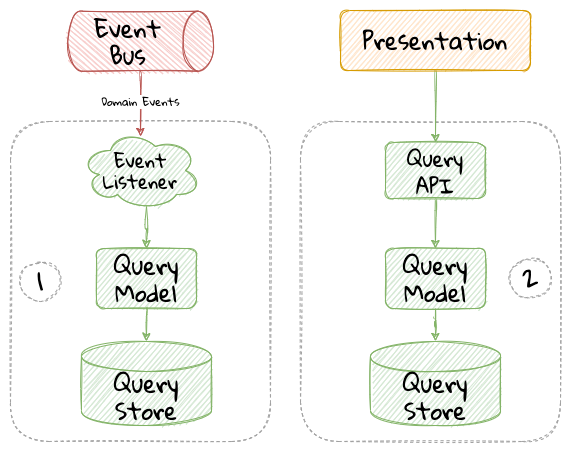

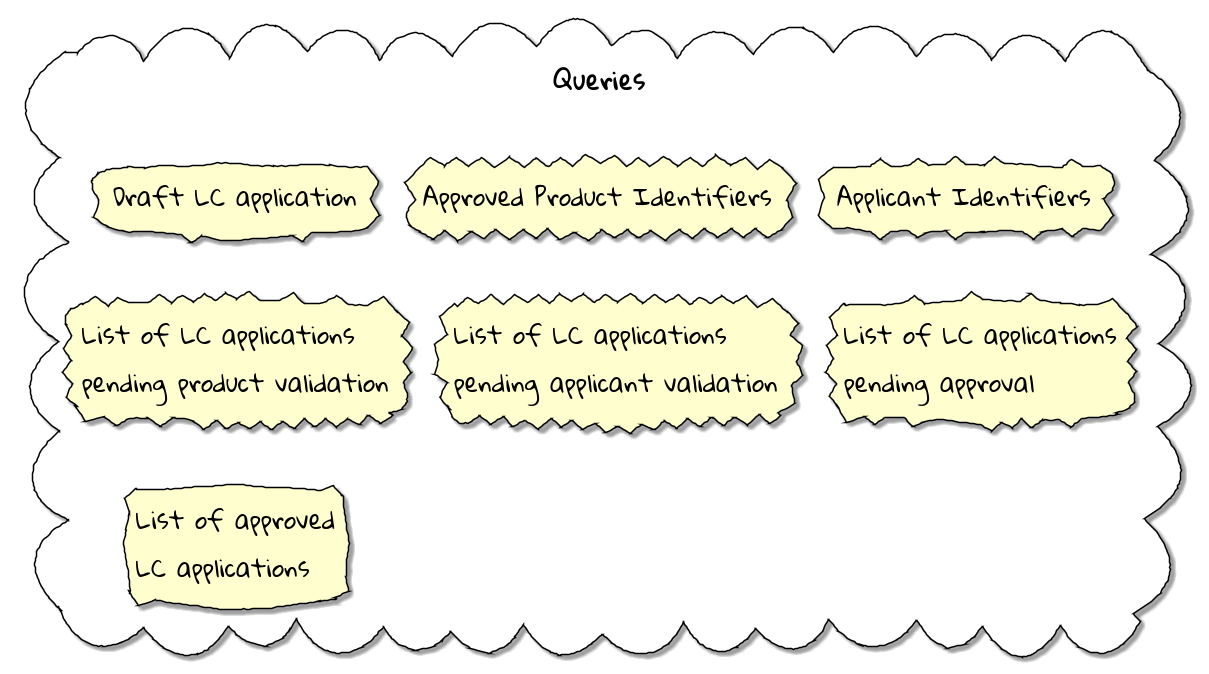

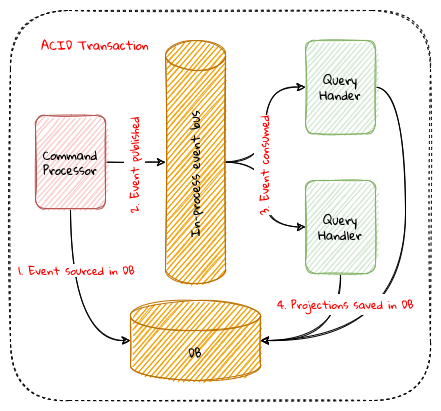

Chapter 7, Implementing queries, dives deeper into how we can construct read optimized representations of the data by listening to domain events. We will also look at persistence options for these read models.

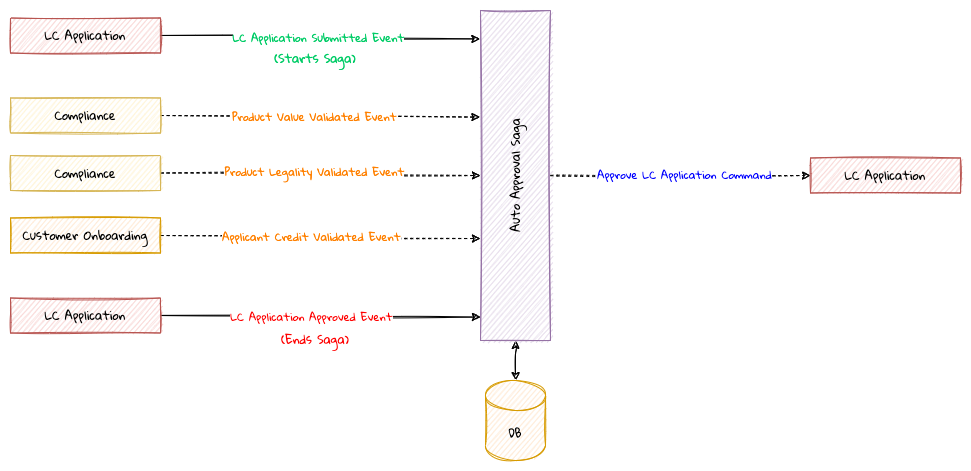

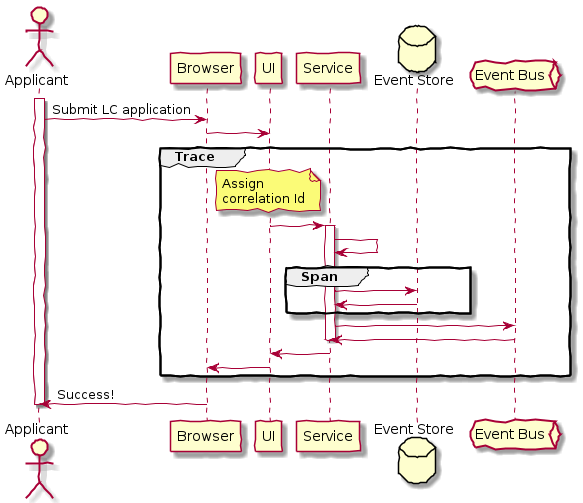

Chapter 8, Long-running workflows, looks at implementing both long-running user operations (sagas) and deadlines. We will also look at how we can keep track of the overall flow using log aggregation and distributed tracing. We will round off by looking at when/whether to choose explicit orchestration components of implicit choreography.

Chapter 9, Integrating with external systems, looks at integrating with other systems and bounded contexts. We will present the various styles of integration and the implications of choosing each of these.

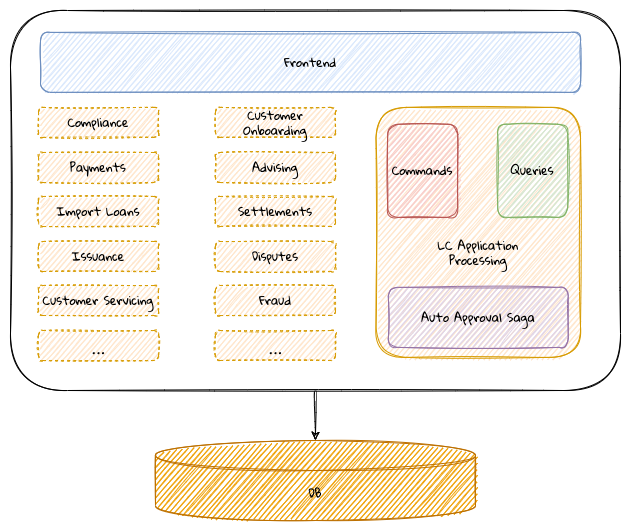

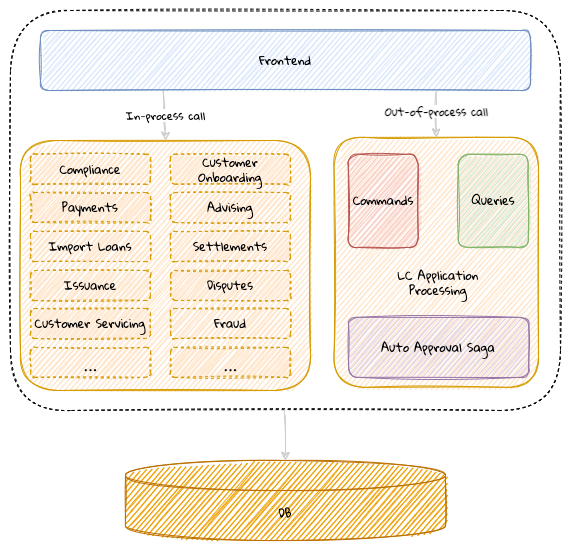

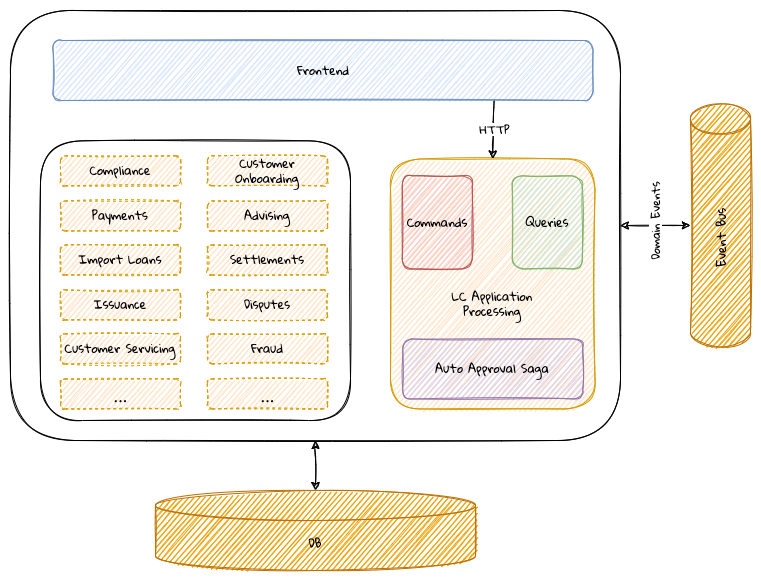

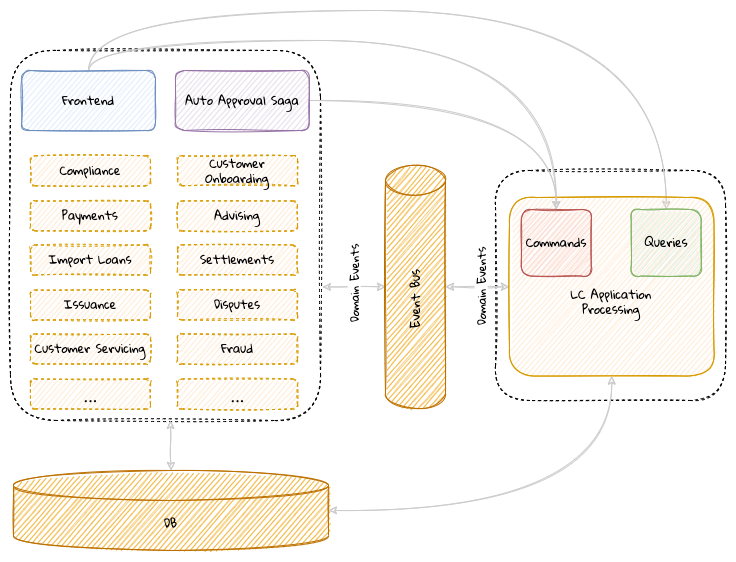

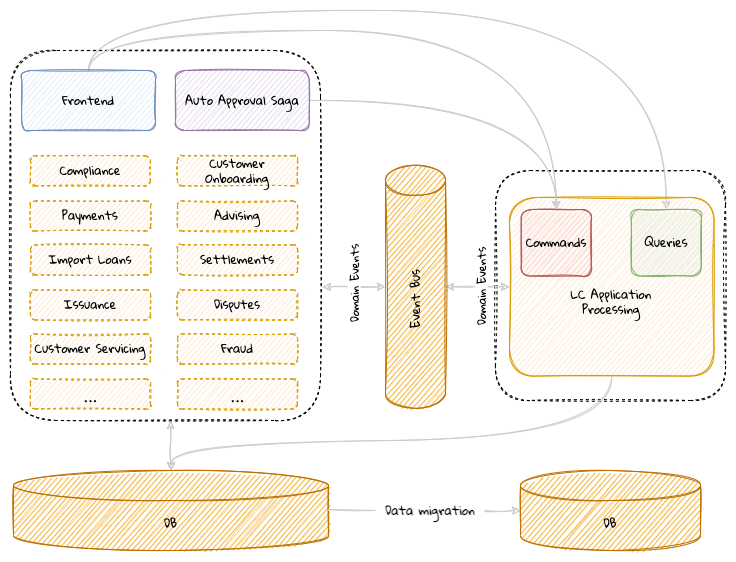

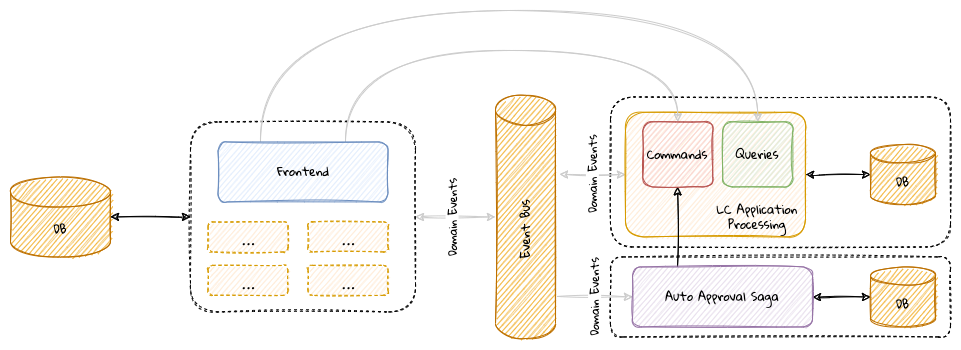

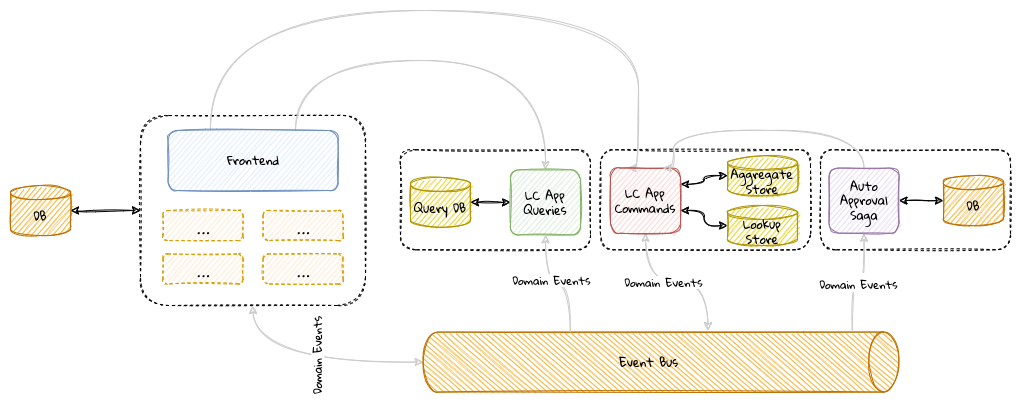

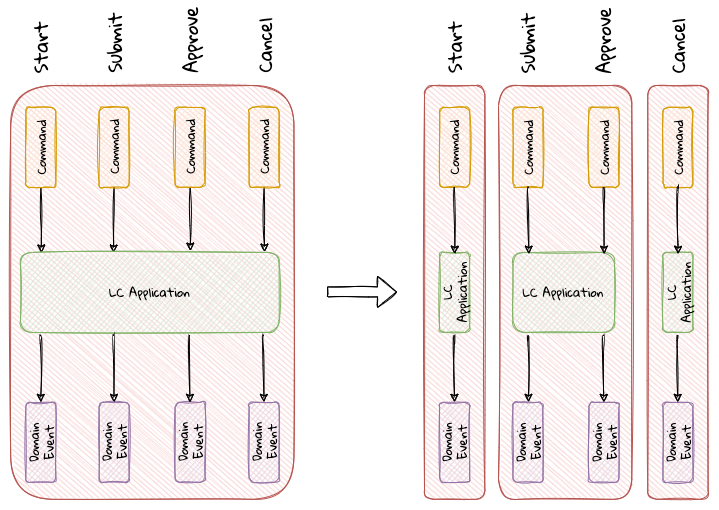

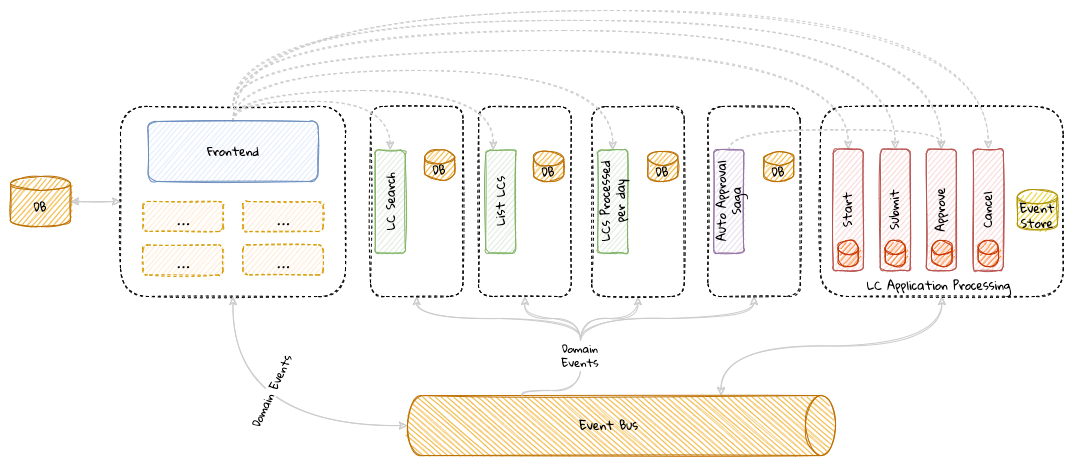

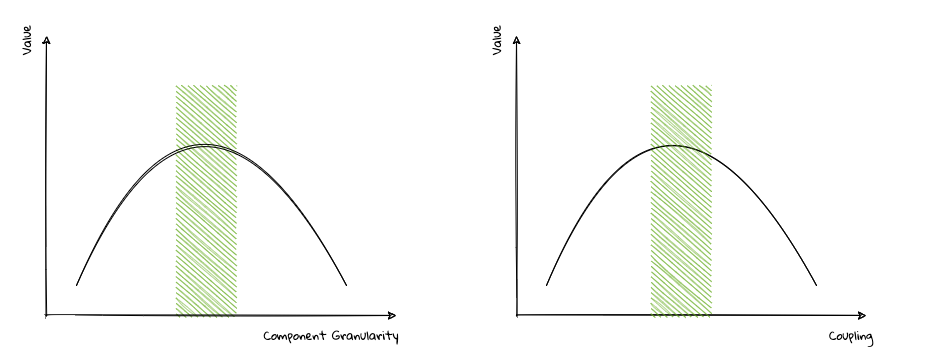

Chapter 10, Decomposing the monolith: Part 1, decomposes the command and the query side of the sample bounded context into distinct components. We will look at the tradeoffs involved when making these choices.

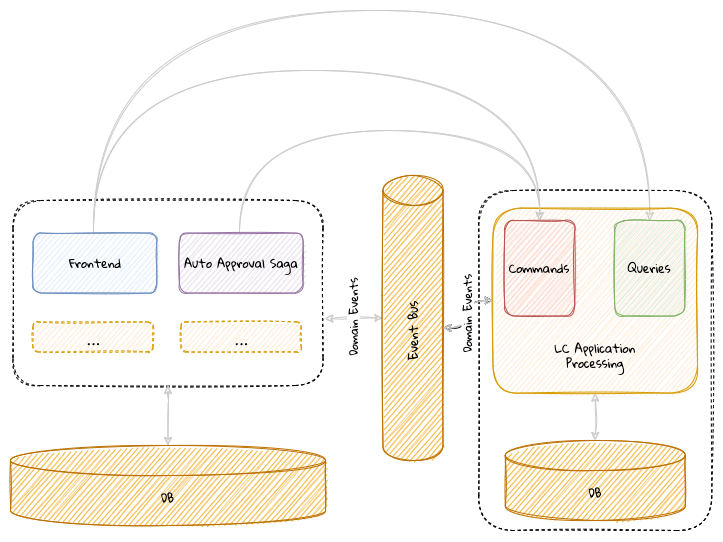

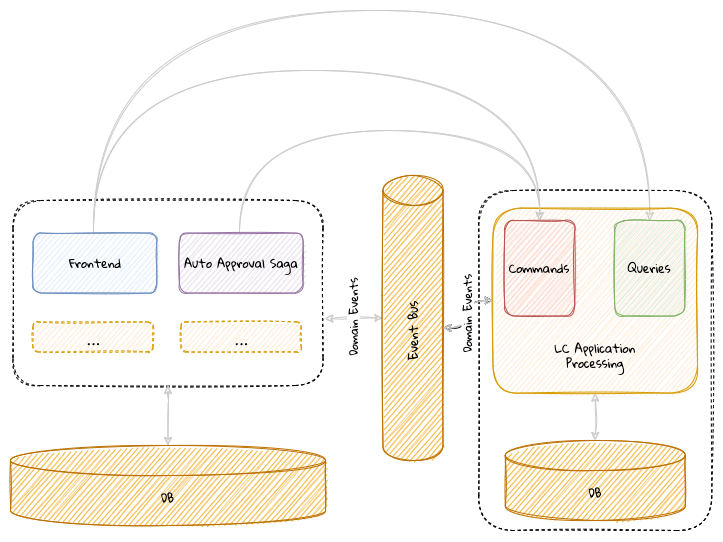

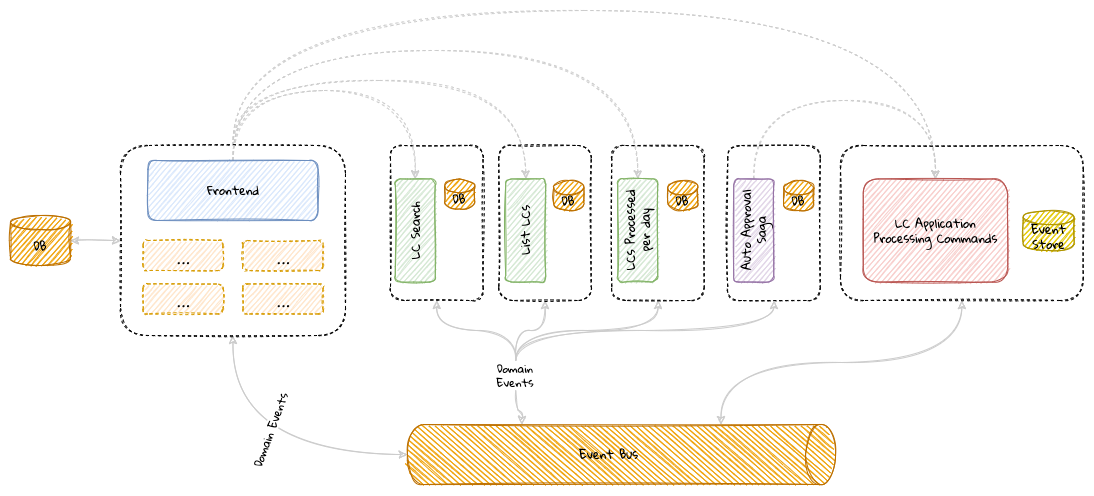

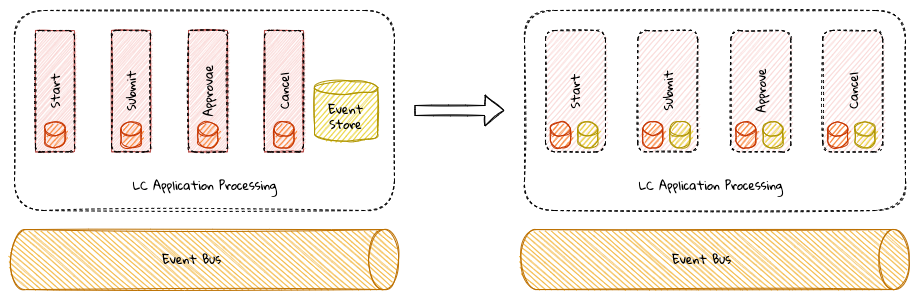

Chapter 11, Decomposing the monolith: Part 2, looks at finer-grained decomposition and the tradeoffs involved beyond the technical implications. We will decompose our application into distinct functions and discuss where it might be appropriate to draw the line.

Chapter 12, Beyond functional requirements, looks at factors beyond business requirements that can play a significant role in how applications are decomposed. Specifically, we will examine the effect that cross-functional requirements play when applying DDD.

About the authors

Premanand (Prem) Chandrasekaran is a technology leader and change agent with a solid track record of leading large technology teams and helping businesses deliver mission critical problems while exhibiting high internal and external quality. In the past two decades, he has had the pleasure of helping a variety of clients and domains ranging from financial services, online retailers, education, healthcare startups among others. His specialties include technical innovation, architecture, continuous delivery, agile/iterative transformation and employee development. When not fiddling with his trusty laptop, he spends time cutting vegetables, cooking, playing video games and analyzing the nuances of the game of cricket.

I would like to first and foremost thank my loving and patient wife Vidya and son Siddharth for their continued support, patience, and encouragement throughout the long process of writing this book. I would also like to thank my colleague and mentor Gagan Madan for constantly challenging me, pushing my limits and inspiring me to achieve greater heights. Finally, my co-author Karthik, without his perseverance and gentle prodding, it would have been very hard to finish this project. Lastly, my employer Thoughtworks for encouraging me to undertake this project and all my fellow Thoughtworkers for being a constant source of inspiration!

Karthik Krishnan is a technology leader with over 25 years of experience in designing and building large-scale enterprise solutions across financial and retail domains. He has played numerous technical roles in leading product development for major financial institutions. He is currently serving the role of Technical Principal at Thoughtworks. He is passionate about platform thinking, solution architecture, application security and strives to be known as a coding architect. His most recent assignment entailed leading a large technology team helping their clients in their legacy modernization journey with Cloud. When not working, he spends time practicing playing tunes on his musical keyboard.

I would like to thank my wife Suja and daughter Ananya for being my pillar of support, and in providing all the necessary encouragement, support, more importantly, for being very understanding, patient and accommodate of my long book writing sessions eating into their weekend plans. This book would not have been possible without them. And I would like to thank my friend, colleague and co-author Prem for providing the energy and bringing in new ideas for discussion and collaborating with me in encouraging healthy debates and discussions through the process of the creation of this book. Lastly, my employer Thoughtworks for providing me the space and encouraging me to write this book and all my colleagues at Thoughtworks for providing their valuable feedback through the course of this book writing journey. Page Break

About the reviewer

Vangos Pterneas helps innovative companies increase their revenue using motion technology and virtual reality. He is an expert in Kinect, HoloLens, Oculus Rift, and HTC Vive.

Microsoft has awarded him with the title of Most Valuable Professional for his technical contributions to the open source community. Vangos runs LightBuzz Inc, collaborating with clients from all over the world. He’s also the author of Getting Started with HTML5 WebSocket Programming and The Dark Art of Freelancing.

Part 1: Foundations

1. The rationale for domain-driven design

The being cannot be termed rational or virtuous, who obeys any authority, but that of reason.

1.1. Introduction

According to the Project Management Institute’s (PMI) Pulse of the Profession report published in February 2020, only 77% of all projects meet their intended goals — and even this is true only in the most mature organizations. For less mature organizations, this number falls to just 56% i.e. approximately one in every two projects does not meet its intended goals. Furthermore, approximately one in every five projects is declared an outright failure. At the same time, we also seem to be embarking on our most ambitious and complex projects.

In this chapter, we will examine the main causes for project failure and look at how applying domain-driven design provides a set of guidelines and techniques to improve the odds of success in our favor. While Eric Evans wrote his classic book on the subject way back in 2003, we look at why that work is still extremely relevant in today’s times.

1.2. Why do software projects fail?

Failure is simply the opportunity to begin again, this time more intelligently.

According to the project success report published in the Project Management Journal of the PMI, the following six factors need to be true for a project to be deemed successful:

| Category | Criterion | Description |

|---|---|---|

Project |

Time |

It meets the desired time schedules |

Cost |

Its cost does not exceed budget |

|

Performance |

It works as intended |

|

Client |

Use |

Its intended clients use it |

Satisfaction |

Its intended clients are happy |

|

Effectiveness |

Its intended clients derive direct benefits through its implementation |

With all of these criteria being applied to assess project success, a large percentage of projects fail for one reason or another. Let’s examine some of the top reasons in more detail:

1.2.1. Inaccurate requirements

PMI’s Pulse of the Profession report from 2017 highlights a very starking fact — a vast majority of projects fail due to inaccurate or misinterpreted requirements. It follows that it is impossible to build something that clients can use, are happy with and makes them more effective at their jobs if the wrong thing gets built — even much less for the project to be built on time, and under budget.

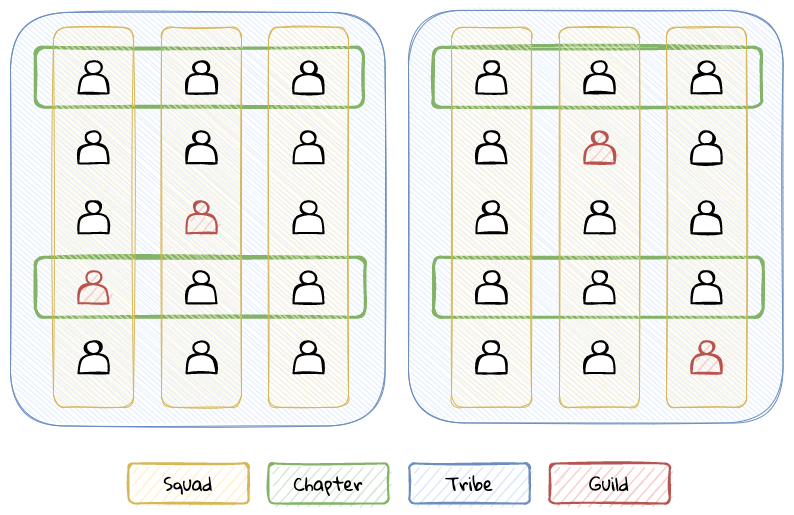

IT teams, especially in large organizations are staffed with mono-skilled roles such as UX designer, developer, tester, architect, business analyst, project manager, product owner, business sponsor, etc. In a lot of cases, these people are parts of distinct organization units/departments — each with its own set of priorities and motivations. To make matters even worse, the geographical separation between these people only keeps increasing. The need to keep costs down and the current COVID-19 ecosystem does not help matters either.

All this results in a loss in fidelity of information at every stage in the assembly line, which then results in misconceptions, inaccuracies, delays and eventually failure!

1.2.2. Too much architecture

Writing complex software is quite a task. One cannot just hope to sit down and start typing code — although that approach might work in some trivial cases. Before translating business ideas into working software, a thorough understanding of the problem at hand is necessary. For example, it is not possible (or at least extremely hard) to build credit card software without understanding how credit cards work in the first place. To communicate one’s understanding of a problem, it is not uncommon to create software models of the problem, before writing code. This model or collection of models represents the understanding of the problem and the architecture of the solution.

Efforts to create a perfect model of the problem — one that is accurate in a very broad context, are not dissimilar to the proverbial holy grail quest. Those accountable to produce the architecture can get stuck in analysis paralysis and/or big design up front, producing artifacts that are one or more of too high level, wishful, gold-plated, buzzword-driven, disconnected from the real world — while not solving any real business problems. This kind of lock-in can be especially detrimental during the early phases of the project when knowledge levels of team members are still up and coming. Needless to say, projects adopting such approaches find it hard to meet with success consistently.

| For a more comprehensive list of modeling anti-patterns, refer to Scott W. Ambler’s website (http://agilemodeling.com) and book dedicated to the subject. |

1.2.3. Too little architecture

Agile software delivery methods manifested themselves in the late 90s, early 2000s in response to heavyweight processes collectively known as waterfall. These processes seemed to favor big design up front and abstract ivory tower thinking based on wishful, ideal world scenarios. This was based on the premise that thinking things out well in advance ends up saving serious development headaches later on as the project progresses.

In contrast, agile methods seem to favor a much more nimble and iterative approach to software development with a high focus on working software over other artifacts such as documentation. Most teams these days claim to practice some form of iterative software development. However, this obsession to claim conformance to a specific family of agile methodologies as opposed to the underlying principles, a lot of teams misconstrue having just enough architecture with having no perceptible architecture. This results in a situation where adding new features or enhancing existing ones takes a lot longer than what it previously used to — which then accelerates the devolution of the solution to become the dreaded big ball of mud.

1.2.4. Excessive incidental complexity

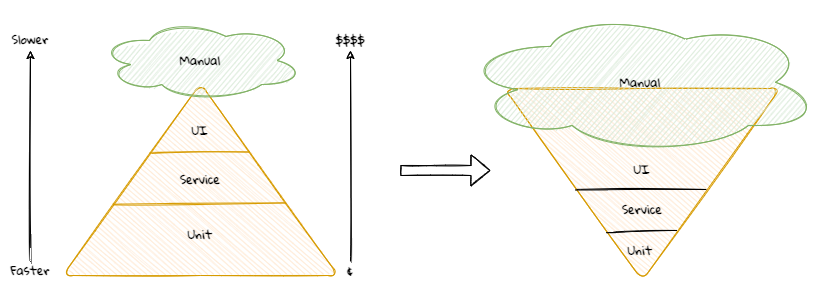

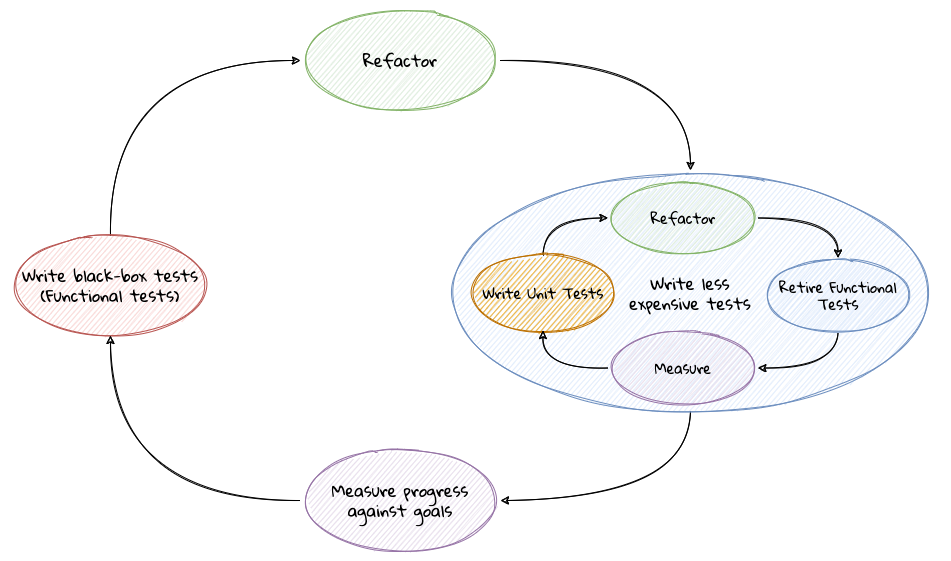

Mike Cohn popularized the notion of the test pyramid where he talks about how a large number of unit tests should form the foundation of a sound testing strategy — with numbers decreasing significantly as one moves up the pyramid. The rationale here is that as one moves up the pyramid, the cost of upkeep goes up copiously while speed of execution slows down manifold. In reality though, a lot of teams seem to adopt a strategy that is the exact opposite of this — known as the testing ice cream cone as depicted below:

The testing ice cream cone is a classic case of what Fred Brooks calls incidental complexity in his seminal paper titled No Silver Bullet — Essence and Accident in Software Engineering. All software has some amount of essential complexity that is inherent to the problem being solved. This is especially true when creating solutions for non-trivial problems. However, incidental or accidental complexity is not directly attributable to the problem itself — but is caused by limitations of the people involved, their skill levels, the tools and/or abstractions being used. Not keeping tabs on incidental complexity causes teams to veer away from focusing on the real problems, solving which provide the most value. It naturally follows that such teams minimize their odds of success appreciably.

1.2.5. Uncontrolled technical debt

Financial debt is the act of borrowing money from an outside party to quickly finance the operations of a business — with the promise to repay the principal plus the agreed upon rate of interest in a timely manner. Under the right circumstances, this can accelerate the growth of a business considerably while allowing the owner to retain ownership, reduced taxes and lower interest rates. On the other hand, the inability to pay back this debt on time can adversely affect credit rating, result in higher interest rates, cash flow difficulties, and other restrictions.

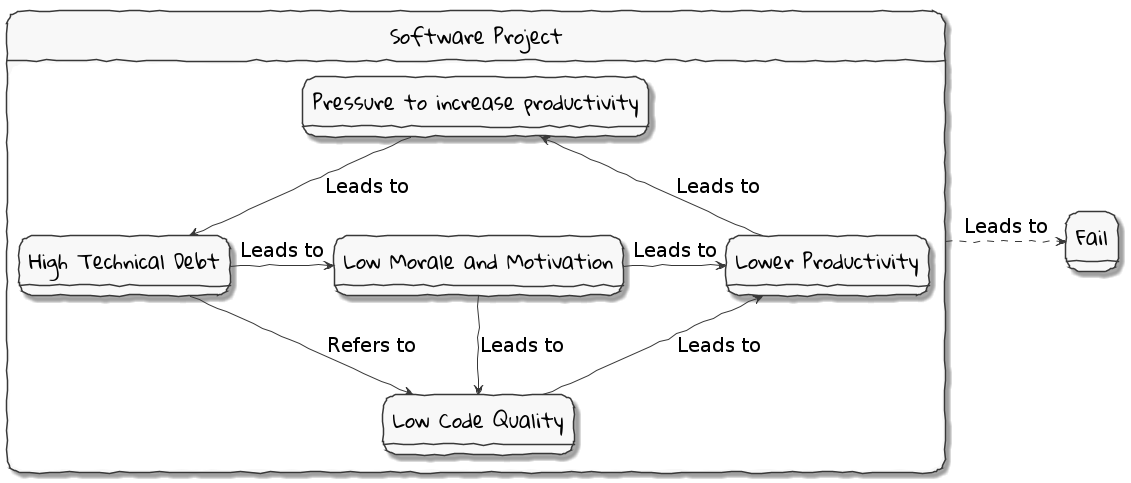

Technical debt is what results when development teams take arguably sub-optimal actions to expedite the delivery of a set of features or projects. For a period of time, just like borrowed money allows you to do things sooner than you could otherwise, technical debt can result in short term speed. In the long term, however, software teams will have to dedicate a lot more time and effort towards simply managing complexity as opposed to thinking about producing architecturally sound solutions. This can result in a vicious negative cycle as illustrated in the diagram below:

In a recent McKinsey survey sent out to CIOs, around 60% reported that the amount of tech debt increased over the past three years. At the same time, over 90% of CIOs allocated less than a fifth of their tech budget towards paying it off. Martin Fowler explores the deep correlation between high software quality (or the lack thereof) and the ability to enhance software predictably. While carrying a certain amount of tech debt is inevitable and part of doing business, not having a plan to systematically pay off this debt can have significantly detrimental effects on team productivity and ability to deliver value.

1.2.6. Ignoring Non-Functional Requirements (NFRs)

Stakeholders often want software teams to spend a majority (if not all) of their time working on features that provide enhanced functionality. This is understandable given that such features provide the highest ROI. These features are called functional requirements.

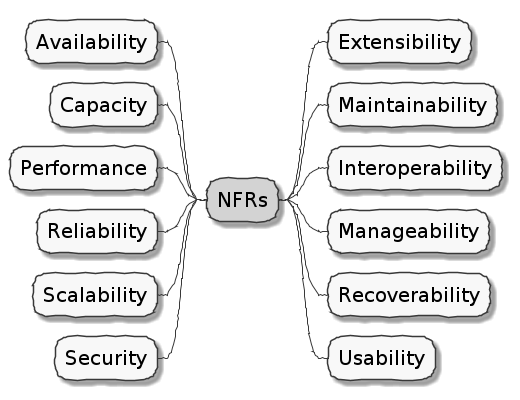

Non-functional requirements (also sometimes known as cross-functional requirements), on the other hand, are those aspects of the system that do not affect functionality directly, but have a profound effect on the efficacy of those using these using and maintaining these systems. There are many kinds of NFRs. A partial list of common NFRs is depicted below:

Very rarely do users explicitly request non-functional requirements, but almost always expect these features to be part of any system they use. Oftentimes, systems may continue to function without NFRs being met, but not without having an adverse impact on the quality of the user experience. For example, the home page of a web site that loads in under 1 second under low load and takes upwards of 30 seconds under higher loads may not be usable during those times of stress. Needless to say, not treating non-functional requirements with the same amount of rigor as explicit, value-adding functional features, can lead to unusable systems — and subsequently failure.

In this section we examined some common reasons that cause software projects to fail. Is it possible to improve our odds? Before we do that, let’s look at the nature of modern software systems and how we can deal with the ensuing complexity.

1.3. Modern systems and dealing with complexity

We can not solve our problems with the same level of thinking that created them.

As we have seen in the previous section, there are several reasons that cause software endeavors to fail. In this section, we will look to understand how software gets built, what the currently prevailing realities are and what adjustments we need to make in order to cope.

1.3.1. How software gets built

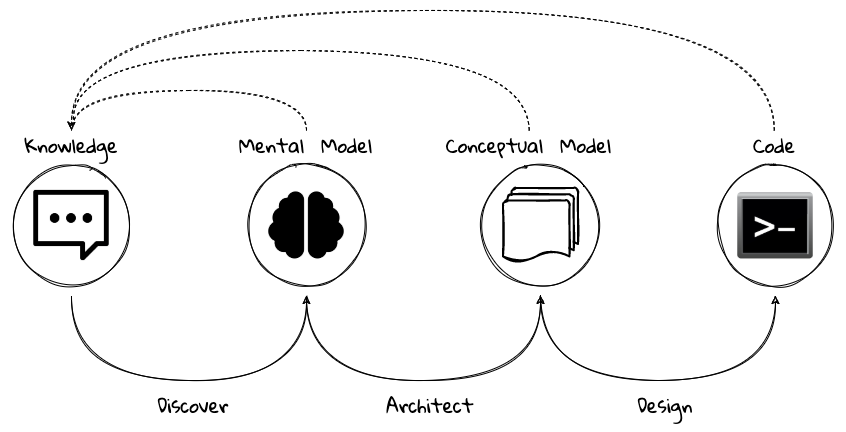

Building successful software is an iterative process of constantly refining knowledge and expressing it in the form of models.We have attempted to capture the essence of the process at a high level here:

Before we express a solution in working code, it is necessary to understand what the problem entails, why the problem is important to solve, and finally, how it can be solved.Irrespective of the methodology used (waterfall, agile, and/or anything in between), the process of building software is one where we need to constantly use our knowledge to refine mental/conceptual models to be able to create valuable solutions.

1.3.2. Complexity is inevitable

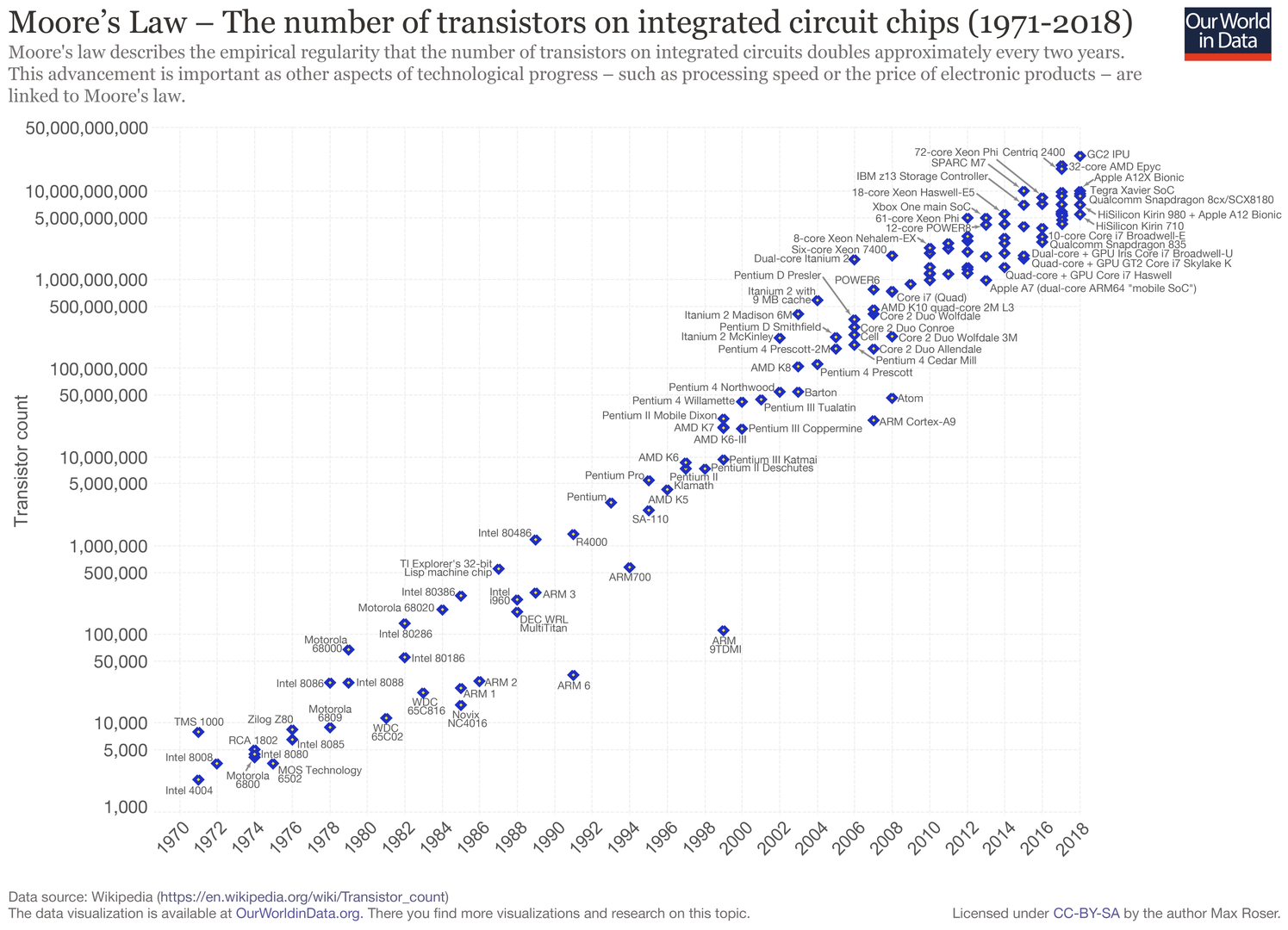

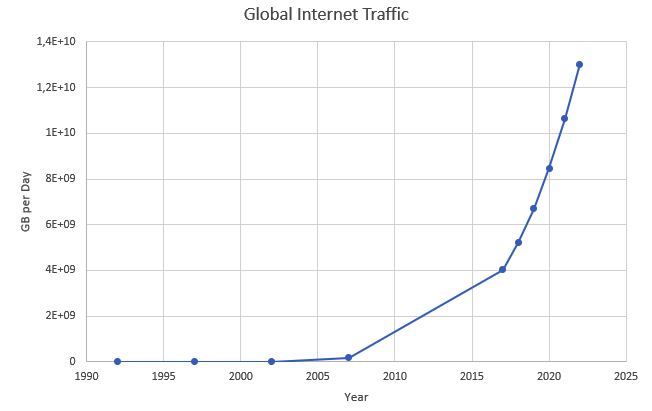

We find ourselves in the midst of the fourth industrial revolution where the world is becoming more and more digital — with technology being a significant driver of value for businesses.Exponential advances in computing technology as illustrated by Moore’s Law below,

along with the rise of the internet as illustrated below,

has meant that companies are being required to modernize their software systems much more rapidly than they ever have. Along with all this, the onset of commodity computing services such as the public cloud has led to a move away from expensive centralized computing systems to more distributed computing ecosystems. As we attempt building our most complex solutions, monoliths are being replaced by an environ of distributed, collaborating microservices. Modern philosophies and practices such as automated testing, architecture fitness functions, continuous integration, continuous delivery, devops, security automation, infrastructure as code, to name a few, are disrupting the way we deliver software solutions.

All these advances introduce their own share of complexity. Instead of attempting to control the amount of complexity, there is a need to embrace and cope with it.

1.3.3. Optimizing the feedback loop

As we enter an age of encountering our most complex business problems, we need to embrace new ways of thinking, a development philosophy and an arsenal of techniques to iteratively evolve mature software solutions that will stand the test of time. We need better ways of communicating, analyzing problems, arriving at a collective understanding, creating and modeling abstractions, and then implementing, enhancing the solution.

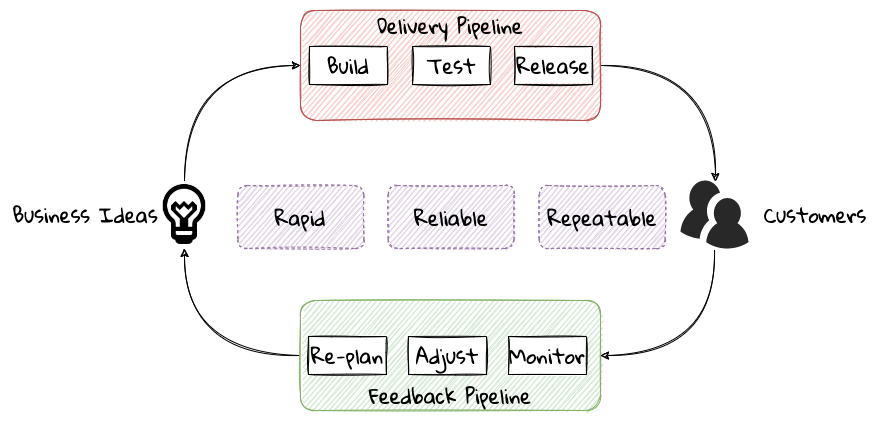

To state the obvious — we’re all building software with seemingly brilliant business ideas on one side and our ever-demanding customers on the other, as shown here:

In between, we have two chasms to cross — the delivery pipeline and the feedback pipeline. The delivery pipeline enables us to put software in the hands of our customers, whereas the feedback pipeline allows us to adjust and adapt. As we can see, this is a continuum. And if we are to build better, more valuable software, this continuum, this potentially infinite loop has to be optimized!

To optimize this loop, we need three characteristics to be present: we need to be fast, we need to be reliable, and we need to do this over and over again. In other words, we need to be rapid, reliable and repeatable — all at the same time!! Take any one of these away, and it just won’t sustain.

Domain-driven design promises to provide answers on how to do this in a systematic manner. In the upcoming section, and indeed the rest of this book, we will examine what DDD is and why it is indispensable when working to provide solutions for non-trivial problems in today’s world of massively distributed teams and applications.

1.4. What is Domain-Driven Design?

Life is really simple, but we insist on making it complicated.

In the previous section, we saw how a myriad of reasons coupled with system complexity get in the way of software project success. The idea of domain-driven design, originally conceived by Eric Evans in his 2003 book, is an approach to software development that focuses on expressing software solutions in the form of a model that closely embodies the core of the problem being solved. It provides a set of principles and systematic techniques to analyze, architect and implement software solutions in a manner that enhances chances of success.

While Evans' work is indeed seminal, ground-breaking, and way ahead of its time, it is not prescriptive at all. This is a strength in that it has enabled evolution of DDD beyond what Evans had originally conceived at the time. On the other hand, it also makes it extremely hard to define what DDD actually encompasses, making practical application a challenge. In this section, we will look at some foundational terms and concepts behind domain-driven design. Elaboration and practical application of these concepts will happen in upcoming chapters of this book.

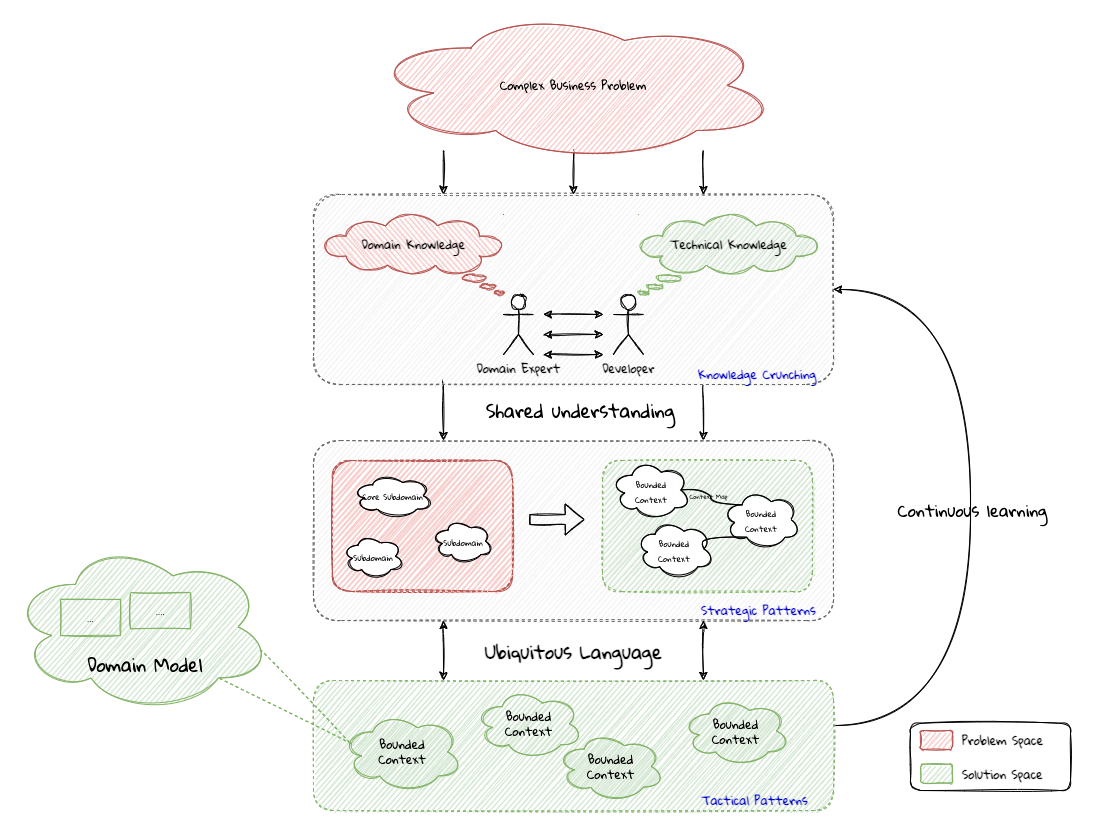

When encountered with a complex business problem:

-

Understand the problem: To have a deep, shared understanding of the problem, it is necessary for business experts and technology experts to collaborate closely. Here we collectively understand what the problem is and why it is valuable to solve. This is termed as the domain for the problem.

-

Break down the problem into more manageable parts: To keep complexity at manageable levels, break down complex problems into smaller, independently solvable parts. These parts are termed as subdomains. It may be necessary to further break down subdomains where the subdomain is still too complex. Assign explicit boundaries to limit the functionality of each subdomain. This boundary is termed as the bounded context for that subdomain. It may also be convenient to think of the subdomain as a concept that makes more sense to the domain experts (in the problem space), whereas the bounded context is a concept that makes more sense to the technology experts (in the solution space).

-

For each of these bounded contexts:

-

Agree on a shared language: Formalize the understanding by establishing a shared language that is applicable unambiguously within the bounds of the subdomain. This shared language is termed as the ubiquitous language of the domain.

-

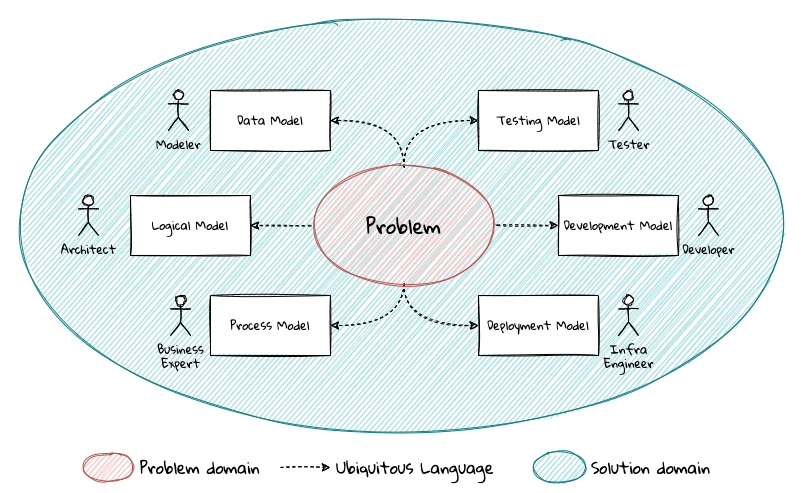

Express understanding in shared models: In order to produce working software, express the ubiquitous language in the form of shared models. This model is termed as the domain model. There may exist multiple variations of this model, each meant to clarify a specific aspect of the solution. For example, a process model, a sequence diagram, working code, a deployment topology, etc.

-

-

Embrace incidental complexity of the problem: It is important to note that it is not possible to shy away from the essential complexity of a given problem. By breaking down the problem into subdomains and bounded contexts, we are attempting to distribute it (more or less) evenly across more manageable parts.

-

Continuously evolve for greater insight: It is important to understand that the above steps are not a one-time activity. Businesses, technologies, processes and our understanding of these evolve, it is important for our shared understanding to remain in sync with these models through continuous refactoring.

A pictorial representation of the essence of domain-driven design is expressed here:

We appreciate that this is quite a whirlwind introduction to the subject of domain-driven design.

1.4.1. Understanding the problem using strategic design

In this section, let’s demystify some commonly used concepts and terms when working with domain-driven design. First and foremost, we need to understand what we mean by the first "D" — domain.

What is a domain?

The foundational concept when working with domain-driven design is the notion of a domain. But what exactly is a domain?The word domain, which has its origins in the 1600s to the Old French word domaine (power), Latin word dominium (property, right of ownership) is a rather confusing word. Depending on who, when, where and how it is used, it can mean different things:

In the context of a business however, the word domain covers the overall scope of its primary activity — the service it provides to its customers. This is also referred as the problem domain.For example, Tesla operates in the domain of electric vehicles, Netflix provides online movies and shows, while McDonald’s provides fast food. Some companies like Amazon, provide services in more than one domain — online retail, cloud computing, among others. The domain of a business (at least the successful ones) almost always encompasses fairly complex and abstract concepts. To cope with this complexity, it is usual to decompose these domains into more manageable pieces called subdomains. Let us understand subdomains in more detail next.

What is a subdomain?

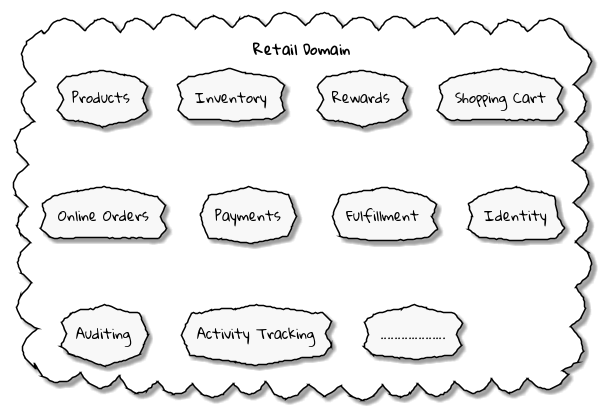

At its essence, Domain-driven design provides means to tackle complexity. Engineers do this by breaking down complex problems into more manageable ones called subdomains.This facilitates better understanding and makes it easier to arrive at a solution. For example, the online retail domain may be divided into subdomains such as product, inventory, rewards, shopping cart, order management, payments, shipping, etc. as shown below:

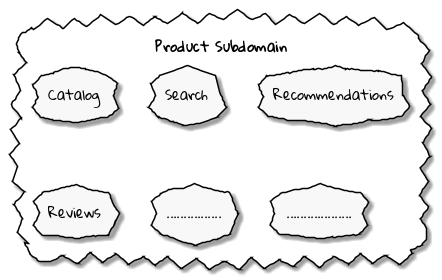

In certain businesses, subdomains themselves may turn out to become very complex on their own and may require further decomposition. For instance, in the retail example above, it may be required to break the products subdomain into further constituent subdomains such as catalog, search, recommendations, reviews, etc. as shown below:

Further breakdown of subdomains may be needed until we reach a level of manageable complexity. Domain decomposition is an important aspect of DDD. Let’s look at the types of subdomains to understand this better.

| The terms domain and subdomains tend to get used interchangeably quite often. This can be confusing to the casual onlooker. Given that sub(domains) tend to be quite complex and hierarchical, a subdomain can be a domain in its own right. |

Types of subdomains

Breaking down a complex domain into more manageable subdomains is a great thing to do. However, not all subdomains are created equal. With any business, the following three types of subdomains are going to be encountered:

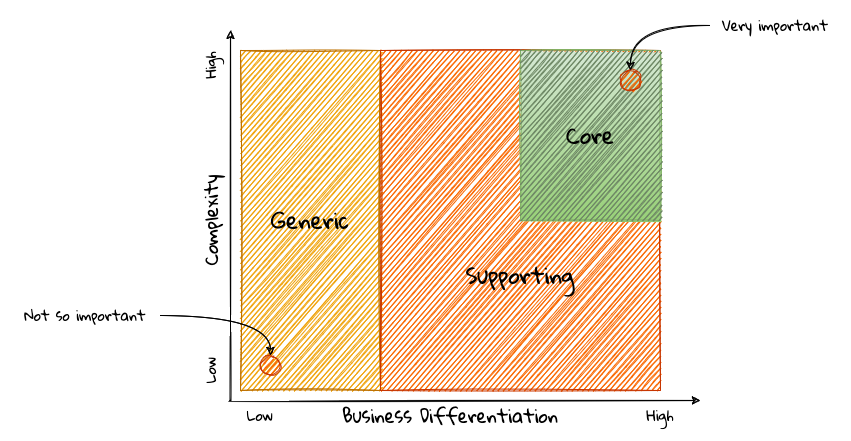

-

Core: The main focus area for the business. This is what provides the biggest differentiation and value. It is therefore natural to want to place the most focus on the core subdomain. In the retail example above, shopping cart and orders might be the biggest differentiation — and hence may form the core subdomains for that business venture. It is prudent to implement core subdomains in-house given that it is something that businesses will desire to have the most control over. In the online retail example above, the business may want to focus on providing an enriched experience to place online orders. This will make the online orders and shopping cart part of the core subdomain.

-

Supporting: Like with every great movie, where it is not possible to create a masterpiece without a solid supporting cast, so it is with supporting or auxiliary subdomains. Supporting subdomains are usually very important and very much required, but may not be the primary focus to run the business. These supporting subdomains, while necessary to run the business, do not typically offer a significant competitive advantage. Hence, it might be even fine to completely outsource this work or use an off-the-shelf solution as is or with minor tweaks. For the retail example above, assuming that online ordering is the primary focus of this business, catalog management may be a supporting subdomain.

-

Generic: When working with business applications, one is required to provide a set of capabilities not directly related to the problem being solved. Consequently, it might suffice to just make use of an off-the-shelf solution. For the retail example above, the identity, auditing and activity tracking subdomains might fall in that category.

| It is important to note that the notion of core vs. supporting vs. generic subdomains is very context specific. What is core for one business may be supporting or generic for another. Identifying and distilling the core domain requires deep understanding and experience of what problem is being attempted to be solved. |

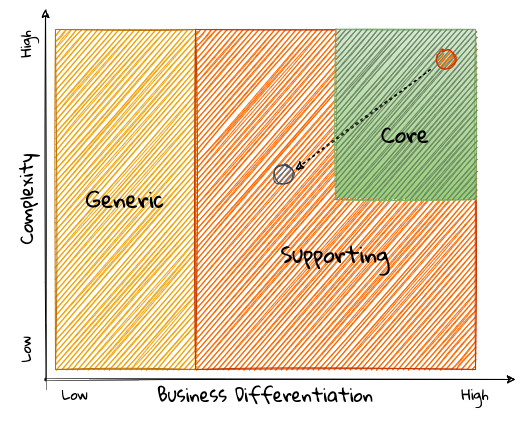

Given that the core subdomain establishes most of the business differentiation, it will be prudent to devote the most amount of energy towards maintaining that differentiation. This is illustrated in the core domain chart here:

Over a period of time, it is only natural that competitors will attempt to emulate your successes. Newer, more efficient methods will arise, reducing the complexity involved, disrupting your core. This may cause the notion of what is currently core, to shift and become a supporting or generic capability as depicted here:

To continue running a successful operation, it is required to constantly innovate in the core. For example, when AWS started the cloud computing business, it only provided simple infrastructure (IaaS) solutions. However, as competitors like Microsoft, Google and others started to catch up, AWS has had to provide several additional value-added services (for example, PaaS, SaaS, etc).

As is evident, this is not just an engineering problem. It requires deep understanding of the underlying business. That’s where domain experts can play a significant role.

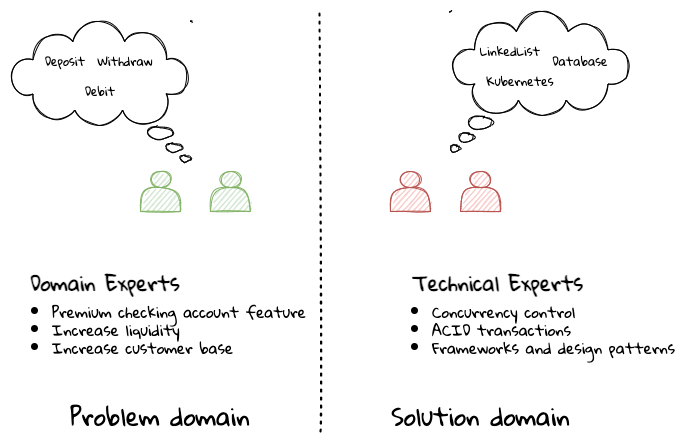

Domain and technical experts

Any modern software team requires expertise in at least two areas — the functionality of the domain and the art of translating it into high quality software. At most organizations, these exist as at least two distinct groups of people.

Domain experts — those who have a deep and intimate understanding of the domain. Domain experts are subject-matter experts (SMEs) who have a very strong grasp of the business. Domain experts may have varying degrees of expertise. Some SMEs may choose to specialize in specific subdomains, while others may have a broader understanding of how the overall business works.

Technical experts on the other hand, enjoy solving specific, quantifiable computer science problems. Often, technical experts do not feel it worth their while understanding the context of the business they work in. Rather, they seem overly eager to only enhance their technical skills that are a continuation of their learnings in academia.

While the domain experts specify the why and the what, technical experts, (software engineers) largely help realize the how. Strong collaboration and synergy between both groups is absolutely essential to ensure sustained high performance and success.

A divide originating in language

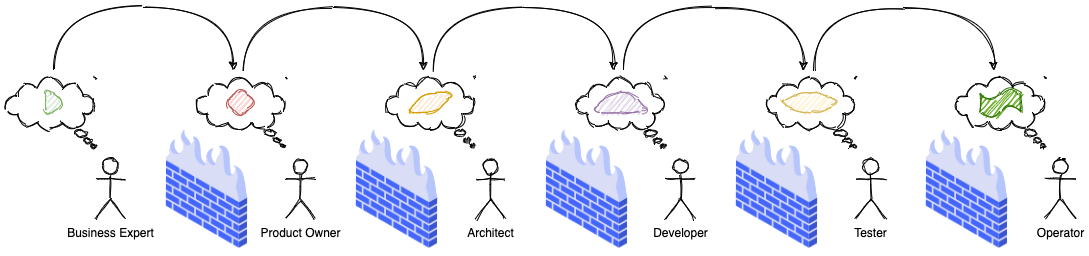

While strong collaboration between these groups is necessary, it is important to appreciate that these groups of people seem to have distinct motivations and differences in thinking. Seemingly, this may appear to be restricted to simple things like differences in their day-to-day language. However, deeper analysis usually reveals a much larger divide in aspects such as goals, motivations etc. This is illustrated in the picture here:

But this is a book primarily focused towards technical experts. Our point is that it is not possible to be successful by just working on technically challenging problems without gaining a sound understanding of the underlying business context.

Every decision we take regarding the organization, be it requirements, architecture, code, etc. has business and user consequences. In order to conceive, architect, design, build and evolve software effectively, our decisions need to aid in creating the optimal business impact. As mentioned above, this can only be achieved if we have a clear understanding of the problem we intend to solve. This leads us to the realization that there exist two distinct domains when arriving at the solution for a problem:

| The use of the word domain in this context is made in an abstract sense — not to be confused with the concept of the business domain introduced earlier. |

Problem domain

A term that is used to capture information that simply defines the problem while consciously avoiding any details of the solution. It includes details like why we are trying to solve the problem, what we are trying to achieve and how it needs to be solved. It is important to note that the why, what and how are from the perspective of the customers/stakeholders, not from the perspective of the engineers providing software solutions to the problem.

Consider the example of a retail bank which already provides a checking account capability for their customers. They want access to more liquid funds. To achieve that, they need to encourage customers to maintain higher account balances. To do that, they are looking to introduce a new product called the premium checking account with additional features like higher interest rates, overdraft protection, no-charge ATM access, etc. The problem domain expressed in the form of why, what and how is shown here:

| Question | Answer |

|---|---|

Why |

Bank needs access to more liquid funds |

What |

Have customers maintain higher account balances |

How |

By introducing a new product — the premium checking account with enhanced features |

Now that we have defined the problem and the motivations surrounding it, let’s examine how it can inform the solution.

Solution domain

A term used to describe the environment in which the solution is developed. In other words, the process of translating requirements into working software (this includes design, development, testing, deployment, etc). Here the emphasis is on the how of the problem being solved from a software implementation perspective. However, it is very difficult to arrive at a solution without having an appreciation of the why and the what.

Building on the previous premium checking account example, the code-level solution for this problem may look something like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

class PremiumCheckingAccountFactory {

Account openPremiumCheckingAccount(Applicant applicant,

MonetaryAmount initialAmount) {

Salary salary = salaryFor(applicant);

if (salary.isBelowThreshold()) {

throw new InsufficientIncomeException(applicant);

}

Account account = Account.createFor(applicant);

account.deposit(initialAmount);

account.activate();

return account;

}

}

This likely appears like a significant leap from a problem domain description, and indeed it is. Before a solution like this can be arrived at, there may need to exist multiple levels of refinement of the problem. As mentioned in the previous chapter, this process of refinement is usually messy and may lead to inaccuracies in the understanding of the problem, resulting in a solution that may be good (for example, one that is sound from an engineering, software architecture standpoint), but not one that solves the problem at hand. Let’s look at how we can continuously refine our understanding by closing the gap between the problem and the solution domain.

Promoting a shared understanding using a ubiquitous language

Previously, we saw how organizational silos can result in valuable information getting diluted. At a credit card company I used to work with, the words plastic, payment instrument, account, PAN (Primary Account Number), BIN (Bank Identification Number), card were all used by different team members to mean the exact same thing - the credit card when working in the same area of the application. On the other hand, a term like user would be used to sometimes mean a customer, a relationship manager, a technical customer support employee. To make matters worse, a lot of these muddled use of terms got implemented in code as well. While this might feel like a trivial thing, it had far-reaching consequences. Product experts, architects, developers, all came and went, each regressively contributing to more confusion, muddled designs, implementation and technical debt with every new enhancement — accelerating the journey towards the dreaded, unmaintainable, big ball of mud.

DDD advocates breaking down these artificial barriers, and putting the domain experts and the developers on the same level footing by working collaboratively towards creating what DDD calls a ubiquitous language — a shared vocabulary of terms, words, phrases to continuously enhance the collective understanding of the entire team. This phraseology is then used actively in every aspect of the solution: the everyday vocabulary, the designs, the code — in short by everyone and everywhere. Consistent use of the common ubiquitous language helps reinforce a shared understanding and produce solutions that better reflect the mental model of the domain experts.

Evolving a domain model and a solution

The ubiquitous language helps establish a consistent albeit informal lingo among team members. To enhance understanding, this can be further refined into a formal set of abstractions — a domain model to represent the solution in software. When a problem is presented to us, we subconsciously attempt to form mental representations of potential solutions. Further, the type and nature of these representations (models) may differ wildly based on factors like our understanding of the problem, our backgrounds and experiences, etc. This implies that it is natural for these models to be different. For example, the same problem can be thought of differently by various team members as shown here:

As illustrated here, the business expert may think of a process model, whereas the test engineer may think of exceptions and boundary conditions to arrive at a test strategy and so on.

| The illustration above is to depict the existence of multiple models. There may be several other perspectives, for example, a customer experience model, an information security model, etc. which are not depicted. |

Care should be taken to retain focus on solving the business problem at hand at all times. Teams will be better served if they expend the same amount of effort modeling business logic as the technical aspects of the solution. To keep accidental complexity in check, it will be best to isolate the infrastructure aspects of the solution from this model. These models can take several forms, including conversations, whiteboard sessions, documentation, diagrams, tests and other forms of architecture fitness functions. It is also important to note that this is not a one-time activity. As the business evolves, the domain model and the solution will need to keep up. This can only be achieved through close collaboration between the domain experts and the developers at all times.

Scope of domain models and the bounded context

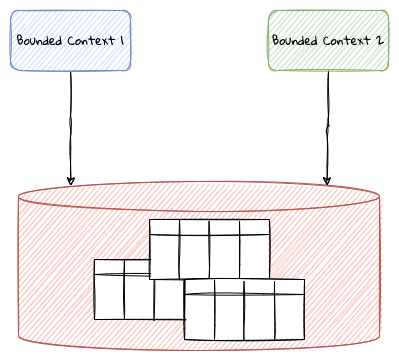

When creating domain models, one of the dilemmas is in deciding how to restrict the scope of these models. One can attempt to create a single domain model that acts as a solution for the entire problem. On the other hand, we may go the route of creating extremely fine-grained models that cannot exist meaningfully without having a strong dependency on others. There are pros and cons in going each way. Whatever be the case, each solution has a scope — bounds to which it is confined to. This boundary is termed as a bounded context.

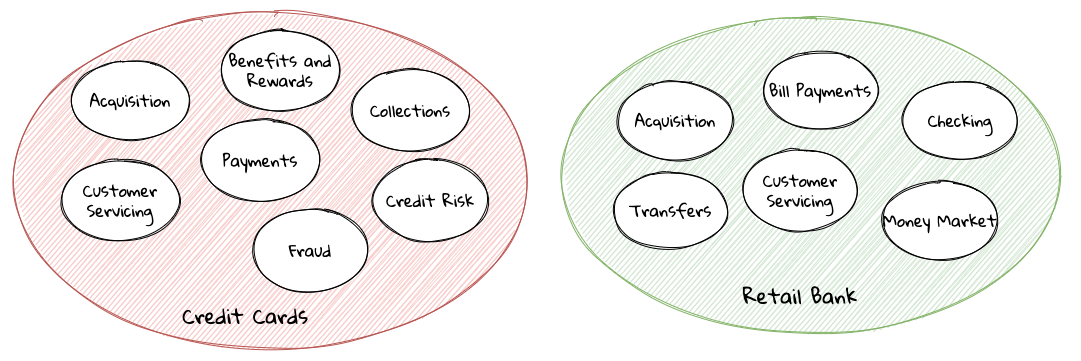

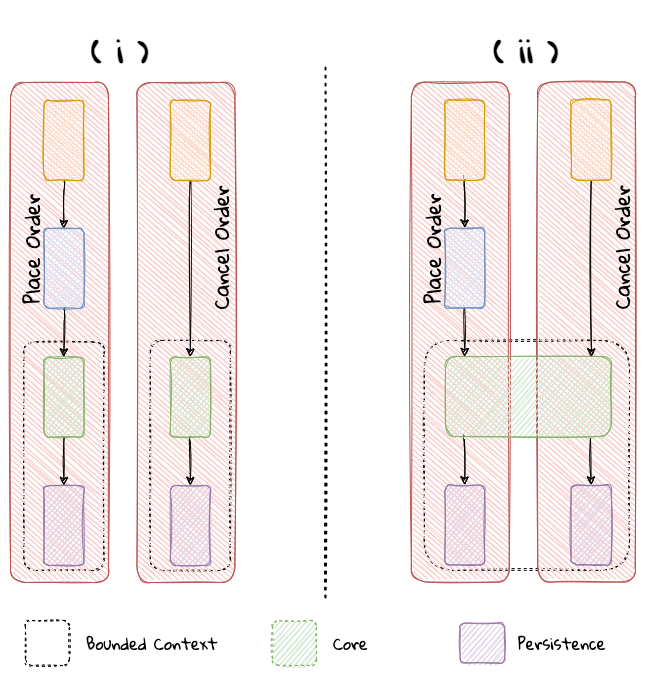

There seems to exist a lot of confusion between the terms subdomains and bounded contexts. What is the difference?It turns out that subdomains are problem space concepts whereas bounded contexts are solution space concepts. This is best explained through the use of an example. Let’s consider the example of a fictitious Acme bank that provides two products: credit cards and retail bank. This may decompose to the following subdomains as depicted here:

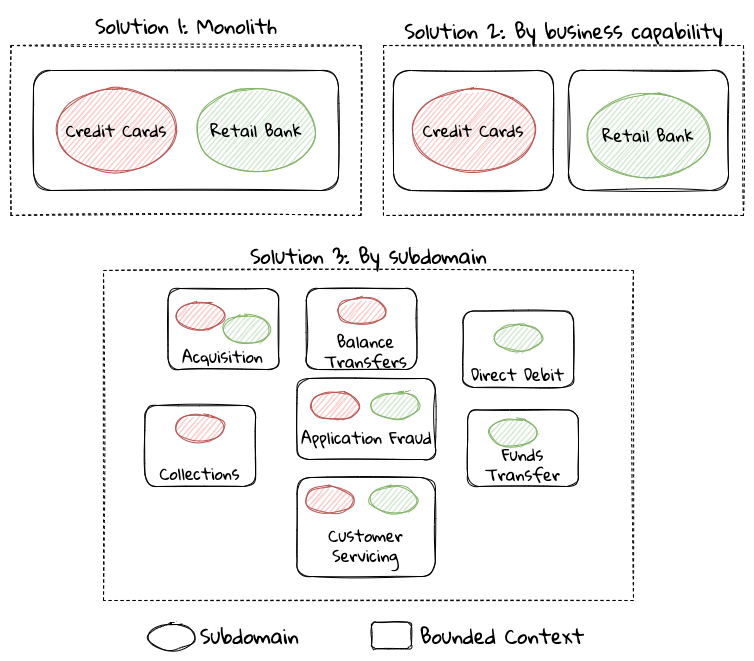

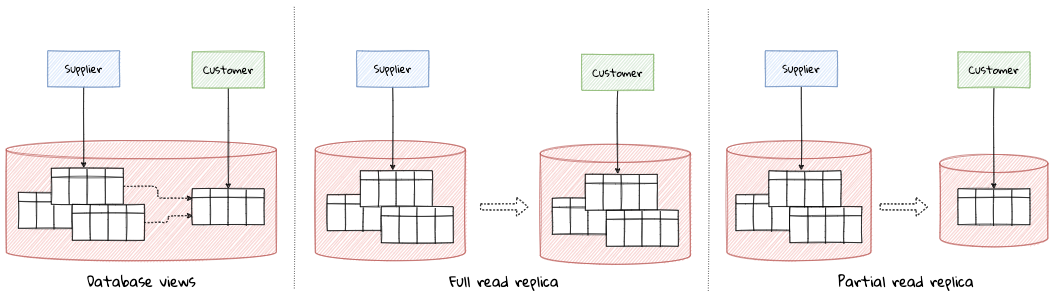

When creating a solution for the problem, many possible solution options exist. We have depicted a few options here:

These are just a few examples of decomposition patterns to create bounded contexts. The exact set of patterns one may choose to use may vary depending on currently prevailing realities like:

-

Current organizational structures

-

Domain experts' responsibilities

-

Key activities and pivotal events

-

Existing applications

| Conway’s Law asserts that organizations are constrained to produce application designs which are copies of their communication structures. Your current organizational structures may not be optimally aligned to your desired solution approach. The inverse Conway maneuver[1] may be applied to achieve isomorphism with the business architecture. |

Whatever be the method used to decompose a problem into a set of bounded contexts, care should be taken to make sure that the coupling between them is kept as low as possible.

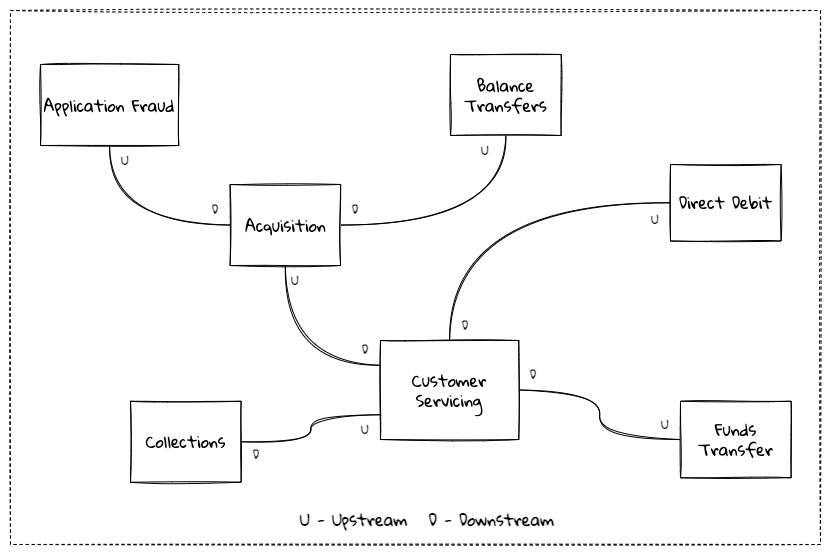

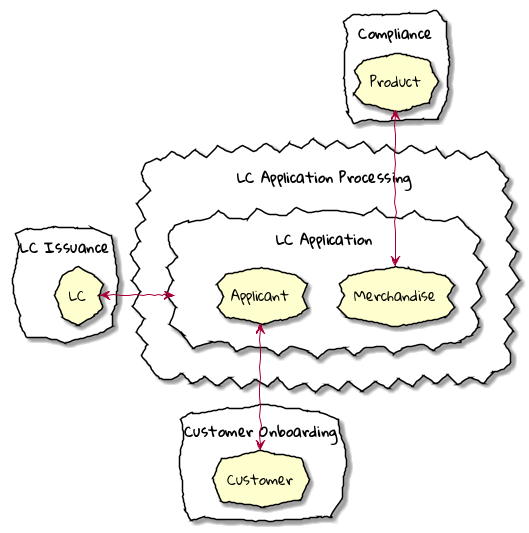

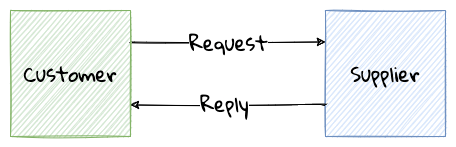

While bounded contexts ideally need to be as independent as possible, they may still need to communicate with each other. When using domain-driven design, the system as a whole can be represented as a set of bounded contexts which have relationships with each other. These relationships define how these bounded contexts can integrate with each other and are called context maps. A sample context map is shown here.

The context map shows the bounded contexts the relationship between them. These relationships can be a lot more nuanced than what is depicted here. We will discuss more details on context maps and communication patterns in Chapter 9: Integrating with external systems.

We have now covered a catalog of concepts that are core to the strategic design tenets of domain-driven design. Let’s look at some tools that can help expedite this process.

In subsequent chapters we will reinforce all the concepts introduced here in a lot more detail.

In the next section, we will look at why the ideas of DDD, introduced all those years ago, are still very relevant. If anything, we will look at why they are becoming even more relevant now than ever.

1.4.2. Implementing the solution using tactical design

In the previous section, we have seen how we can arrive at a shared understanding of the problem using the strategic design tools. We need to use this understanding to create a solution. DDD’s tactical design aspects, tools and techniques help translate this understanding into working software. Let’s look at these aspects in detail. In part 2 of the book, we will apply these to solve a real-world problem.

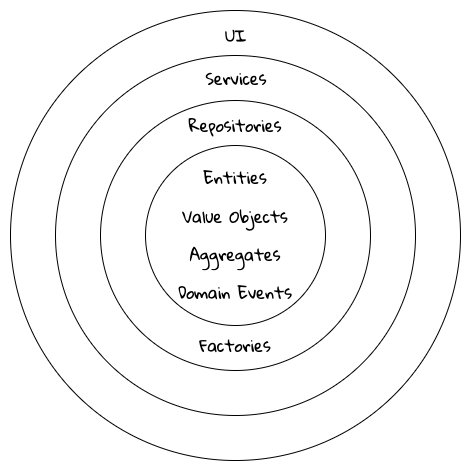

It is convenient to think of the tactical design aspects as depicted in this picture:

Let’s look at the definitions of these elements.

Value objects

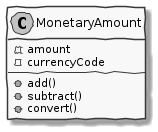

Value objects are immutable objects that encapsulate the data and behavior of one or more related attributes. It may be convenient to think of value objects as named primitives. For example, consider a MonetaryAmount value object. A simple implementation can contain two attributes — an amount and a currency code. This allows encapsulation of behavior such as adding two MonetaryAmount objects safely as shown here:

MonetaryAmount value objectThe effective use of value objects helps protect from the primitive obsession[2] anti-pattern, while increasing clarity. It also allows composing higher level abstractions using one or more value objects. It is important to note that value objects do not have the notion of identity. That is, two value having the same value are treated equal. So two MonetaryAmount objects having the same amount and currency code will be considered equal. Also, it is important to make value objects immutable. That is, a need to change any of the attributes should result in the creation of a new attribute.

It is easy to dismiss the use of value objects as a mere engineering technique, but the consequences of (not) using them can be far-reaching. In the MonetaryAmount example above, it is possible for the amount and currency code to exist as independent attributes. However, the use of the MonetaryAmount enforces the notion of the ubiquitous language. Hence, we recommend the use of value objects as a default instead of using primitives.

| Critics may be quick to point out problems such as class explosion and performance issues. But in our experience, the benefits usually outweigh the costs. But it may be necessary to re-examine this approach if problems occur. |

Entities

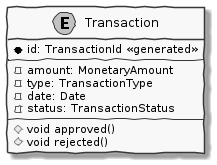

An entity is an object with a unique identity and encapsulates the data and behaviour of its attributes. It may be convenient to view entities as a collection of other entities and value objects that need to be grouped together. A very simple example of an entity is shown here:

Transaction entityIn contrast to a value object, entities have the notion of a unique identifier. This means that two Transaction entities having the same underlying values, but having a different identifier (id) value, will be considered different. On the other hand, two entity instances having the same value for the identifier are considered equal. Furthermore, unlike value objects, entities are mutable. That is, their attributes can and will change over time.

The concept of value objects and entities depends on the context within which they are used. In an order management system, the Address may be implemented as a value object in the E-Commerce bounded context, whereas it may be needed to be implemented as an entity in the Order Fulfillment bounded context.

| It is common to collectively refer to entities and value objects as domain objects. |

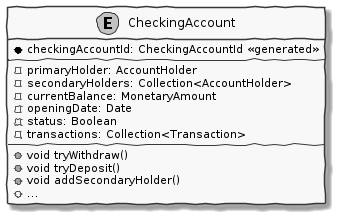

Aggregates

As seen above, entities are hierarchical, in that they can be composed of one more children. Fundamentally, an aggregate:

-

Is an entity usually composed of other child entities and value objects.

-

Encapsulates access to child entities by exposing behavior (usually referred to as commands).

-

Is a boundary that is used to enforce business invariants (rules) in a consistent manner.

-

Is an entry point to get things done within a bounded context.

Consider the example of a CheckingAccount aggregate:

CheckingAccount aggregateNote how the CheckingAccount is composed using the AccountHolder and Transaction` entities among other things. In this example, let’s assume that the overdraft feature (ability to hold a negative account balance) is only available for high net-worth individuals (HNI). Any attempt to change the currentBalance needs to occur in the form of a unique Transaction for audit purposes — irrespective of its outcome. For this reason, the CheckingAccount aggregate makes use of the Transaction entity. Although the Transaction has approve and reject methods as part of its interface, only the aggregate has access to these methods. In this way, the aggregate enforces the business invariant while maintaining high levels of encapsulation. A potential implementation of the tryWithdraw method is shown here:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

class CheckingAccount {

private AccountHolder primaryHolder; (1)

private Collection<Transaction> transactions; (1)

private MonetaryAmount currentBalance; (1)

// Other code omitted for brevity

void tryWithdraw(MonetaryAmount amount) { (2)

MonetaryAmount newBalance = currentBalance.subtract(amount);

Transaction transaction = add(Transaction.withdrawal(id, amount));

if (primaryHolder.isNotHNI() && newBalance.isOverdrawn()) { (3)

transaction.rejected();

} else {

transaction.approved();

currentBalance = newBalance;

}

}

}

| 1 | The CheckingAccount aggregate is composed of child entities and value objects. |

| 2 | The tryWithdraw method acts as a consistency boundary for the operation. Irrespective of the outcome (approved or rejected), the system will remain in a consistent state. In other words, the currentBalance can change only within the confines of the CheckingAccount aggregate. |

| 3 | The aggregate enforces the appropriate business invariant (rule) to allow overdrafts only for HNIs. |

| Aggregates are also referred to as aggregate roots. That is, the object that is at the root of the entity hierarchy. We use these terms synonymously in this book. |

Domain events

As mentioned above, aggregates dictate how and when state changes occur. Other parts of the system may be interested in knowing about the occurrence of changes that are significant to the business. For example, an order being placed or a payment being received, etc. Domain events are the means to convey that something business significant has occurred. It is important to differentiate between system events and domain events. For example, in the context of a retail bank, a row was saved in the database, or a server ran out of disk space, etc. may classify as system events, whereas a deposit was made to a checking account, fraudulent activity was detected on a transaction, etc. could be classified as domain events. In other words, domain events are things that domain experts care about.

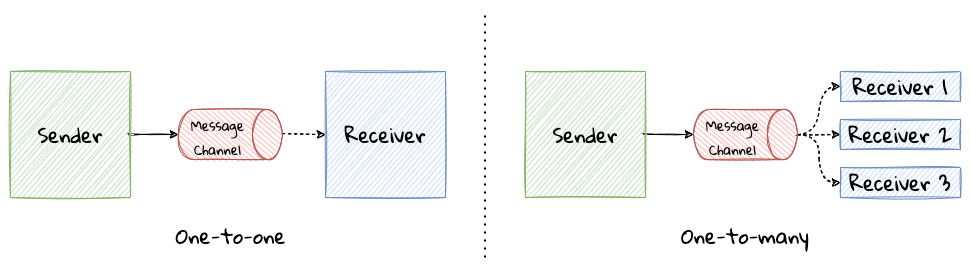

It may be prudent to make use of domain events to reduce the amount of coupling between bounded contexts, making it a critical building block of domain-driven design.

Repositories

Most businesses require durability of data. For this reason, aggregate state needs to be persisted and retrieved when needed. Repositories are objects that enable persisting and loading aggregate instances. This is well documented in Martin Fowler’s Patterns of Enterprise Application Architecture book as part of the repository[3] pattern. It is pertinent to note that we are referring to aggregate repositories here, not just any entity repository. The singular purpose of this repository is to load a single instance of an aggregate using its identifier. It is important to note that this repository does not support finding aggregate instances using any other means. This is because, business operations happen as part of manipulating a single instance of the aggregate within its bounded context.

Factories

In order to work with aggregates and value objects, instances of these need to be constructed. In simple cases, it might suffice to use a constructor to do so. However, aggregate and value object instances can become quite complex depending on amount the state they encapsulate. In such cases, it may be prudent to consider delegating object construction responsibilities to a factory external to the aggregate/value object. We make use of the static factory method, builder, and dependency injection quite commonly in our day-to-day. Joshua Bloch discusses several variations of this pattern in Chapter 2: Creating and destroying objects in his Effective Java book.

Services

When working within the confines of a single bounded context, the public interface (commands) of the aggregate provides a natural API. However, more complex business operations may require interacting with multiple bounded contexts and aggregates. In other words, we may find ourselves in situations where certain business operations do not fit naturally with any single aggregate. Even if interactions are limited to a single bounded context, there may be a need to expose that functionality in an implementation-neutral manner. In such cases, one may consider the use of objects termed as services. Services come in at least 3 flavors:

-

Domain services: To enable coordinating operations among more than one aggregate. For example, transferring money between two checking accounts at a retail bank.

-

Infrastructure services: To enable interactions with a utility that is not core to the business. For example, logging, sending emails, etc. at the retail bank.

-

Application services: Enable coordination between domain services, infrastructure services and other application services. For example, sending email notifications after a successful inter-account money transfer.

Services can also be stateful or stateless. It is best to allow aggregates to manage state making use of repositories, while allowing services to coordinate and/or orchestrate business flows. In complex cases, there may be a need to manage the state of the flow itself. We will look at more concrete examples in part 2 of this book.

| It may become tempting to implement business logic almost exclusively using services — inadvertently leading to the anemic domain model[4] anti-pattern. It is worthwhile striving to encapsulate business logic within the confines of aggregates as a default. |

1.5. Why is DDD Relevant? Why Now?

He who has a why to live for can bear almost anyhow.

In a lot of ways, domain-driven design was way ahead of its time when Eric Evans introduced the concepts and principles back in 2003. DDD seems to have gone from strength to strength. In this section, we will examine why DDD is even more relevant today, than it was when Eric Evans wrote his book on the subject way back in 2003.

1.5.1. Rise of Open Source

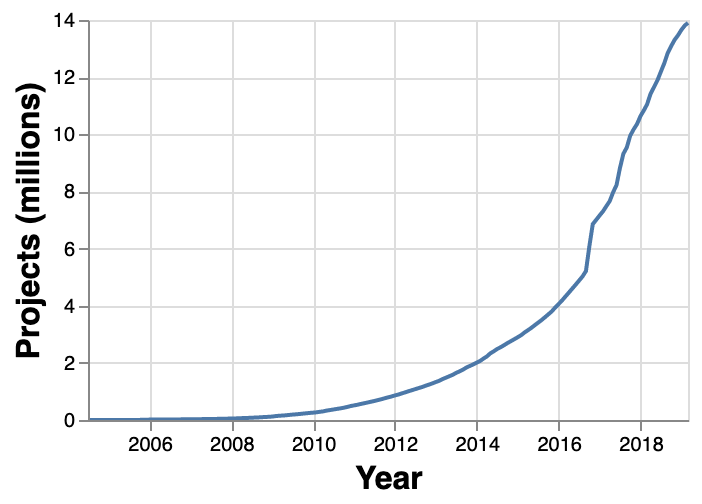

Eric Evans, during his keynote address at the Explore DDD conference in 2017, lamented about how difficult it was to implement even the simplest concepts like immutability in value objects when his book had released. In contrast though, nowadays, it’s simply a matter of importing a mature, well documented, tested library like Project Lombok or Immutables to be productive, literally in a matter of minutes. To say that open source software has revolutionized the software industry would be an understatement! At the time of this writing, the public maven repository (https://mvnrepository.com) indexes no less than a staggering 18.3 million artifacts in a large assortment of popular categories ranging from databases, language runtimes to test frameworks and many many more as shown in the chart below:

Java stalwarts like the spring framework and more recent innovations like spring boot, quarkus, etc. make it a no-brainer to create production grade applications, literally in a matter of minutes. Furtheremore, frameworks like Axon, Lagom, etc. make it relatively simple to implement advanced architecture patterns such are CQRS, event sourcing, that are very complementary to implementing DDD-based solutions.

1.5.2. Advances in Technology

DDD by no means is just about technology, it could not be completely agnostic to the choices available at the time. 2003 was the heyday of heavyweight and ceremony-heavy frameworks like J2EE (Java 2 Enterprise Edition), EJBs (Enterprise JavaBeans), SQL databases, ORMs (Object Relational Mappers) and the like — with not much choice beyond that when it came to enterprise tools and patterns to build complex software — at least out in the public domain. The software world has evolved and come a very long way from there. In fact, modern game changers like Ruby on Rails and the public cloud were just getting released. In contrast though, we now have no shortage of application frameworks, NoSQL databases, programmatic APIs to create infrastructure components with a lot more releasing with monotonous regularity.

All these innovations allow for rapid experimentation, continuous learning and iteration at pace. These game changing advances in technology have also coincided with the exponential rise of the internet and ecommerce as viable means to carry out successful businesses. In fact the impact of the internet is so pervasive that it is almost inconceivable to launch businesses without a digital component being an integral component. Finally, the consumerization and wide scale penetration of smartphones, IoT devices and social media has meant that data is being produced at rates inconceivable as recent as a decade ago. This means that we are building for and solving the most complicated problems by several orders of magnitude.

1.5.3. Rise of Distributed Computing

There was a time when building large monoliths was very much the default. But an exponential rise in computing technology, public cloud, (IaaS, PaaS, SaaS, FaaS), big data storage and processing volumes, which has coincided with an arguable slowdown in the ability to continue creating faster CPUs, have all meant a turn towards more decentralized methods of solving problems.

Domain-driven design with its emphasis on dealing with complexity by breaking unwieldy monoliths into more manageable units in the form of subdomains and bounded contexts, fits naturally to this style of programming. Hence, it is no surprise to see a renewed interest in adopting DDD principles and techniques when crafting modern solutions. To quote Eric Evans, it is no surprise that Domain-Driven Design is even more relevant now than when it was originally conceived!

1.6. Summary

In this chapter we examined some common reasons for why software projects fail. We saw how inaccurate or misinterpreted requirements, architecture (or the lack thereof), excessive technical debt, etc. can get in the way of meeting business goals and success.

We looked at the basic building blocks of domain-driven design such as domains, subdomains, ubiquitous language, domain models, bounded contexts and context maps. We also examined why the principles and techniques of domain-driven design are still very much relevant in the modern age of microservices and serverless. You should now be able to appreciate the basic terms of DDD and understand why it is important in today’s context.

In the next chapter we will take a closer look at the real-world mechanics of domain-driven design. We will delve deeper into the strategic and tactical design elements of DDD and look at how using these can help form the basis for better communication and create more robust designs.

1.7. Further Reading

| Title | Author | Location |

|---|---|---|

Pulse of the Profession - 2017 |

PMI |

|

Pulse of the Profession - 2020 |

PMI |

https://www.pmi.org/learning/library/forging-future-focused-culture-11908 |

Project success: Definitions and Measurement Techniques |

PMI |

https://www.pmi.org/learning/library/project-success-definitions-measurement-techniques-5460 |

Project success: definitions and measurement techniques |

JK Pinto, DP Slevin |

https://www.pmi.org/learning/library/project-success-definitions-measurement-techniques-5460 |

Analysis Paralysis |

Ward Cunningham |

|

Big Design Upfront |

Ward Cunningham |

|

Enterprise Modeling Anti-Patterns |

Scott W. Ambler |

http://agilemodeling.com/essays/enterpriseModelingAntiPatterns.htm |

A Project Manager’s Guide To 42 Agile Methodologies |

Henny Portman |

|

Domain-Driven Design Even More Relevant Now |

Eric Evans |

|

Introducing Deliberate Discovery |

Dan North |

https://dannorth.net/2010/08/30/introducing-deliberate-discovery/ |

No Silver Bullet — Essence and Accident in Software Engineering |

Fred Brooks |

http://faculty.salisbury.edu/~xswang/Research/Papers/SERelated/no-silver-bullet.pdf |

Mastering Non-Functional Requirements |

Sameer Paradkar |

https://www.packtpub.com/product/mastering-non-functional-requirements/9781788299237 |

Big Ball Of Mud |

Brian Foote & Joseph Yoder |

|

The Forgotten Layer of the Test Automation Pyramid |

Mike Cohn |

https://www.mountaingoatsoftware.com/blog/the-forgotten-layer-of-the-test-automation-pyramid |

Tech debt: Reclaiming tech equity |

Vishal Dalal et al |

|

Is High Quality Software Worth the Cost |

Martin Fowler |

2. Where and how does DDD fit?

We won’t be distracted by comparison if we are captivated with purpose.

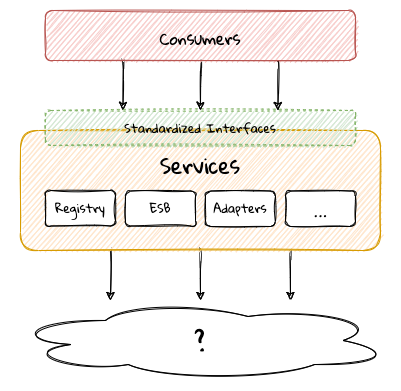

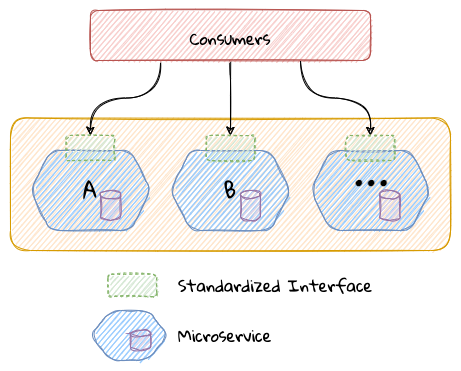

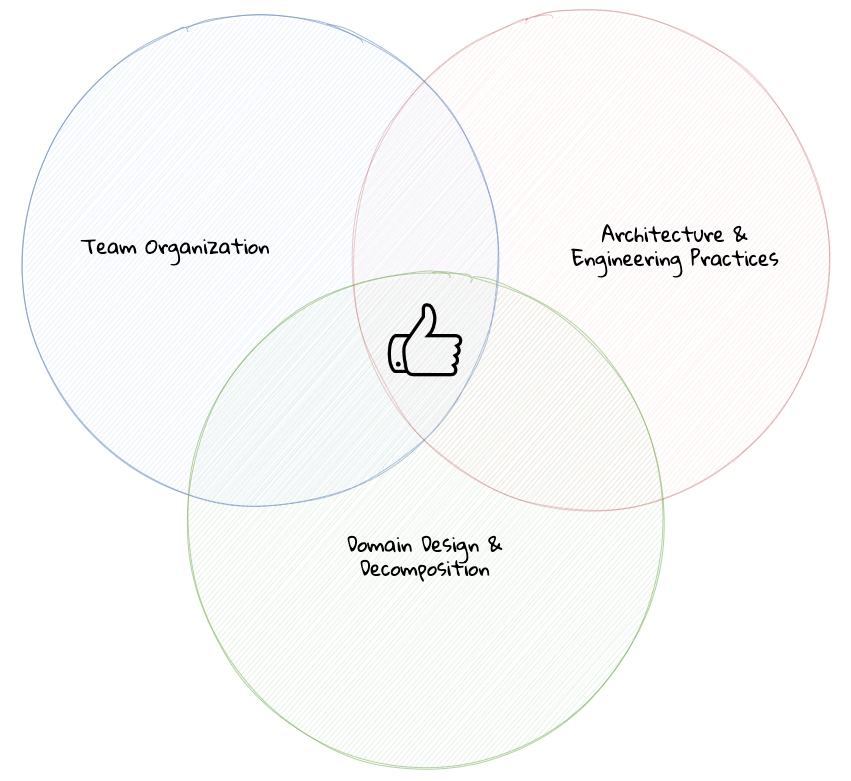

Software architecture refers to the fundamental structures of a software system and the discipline of creating such structures and systems. Over the years, we have accumulated a series of architecture styles and programming paradigms to help us deal with system complexity. In this chapter we will examine how DDD can be applied in a manner that is complementary to these architecture styles and programming paradigms. We will also look at how/where it fits in the overall scheme of things when crafting a software solution.

At the end of this chapter, you will gain an appreciation of a variety of architecture style and programming paradigms, along with some pitfalls to watch out for, when applying them. You will also understand the role that DDD plays in augmenting each of these.

2.1. Architecture Styles

Domain-driven design presents a set of architecture tenets in the form of the strategic and tactical design elements. This enables decomposing large, potentially unwieldy business subdomains into well-factored, independent bounded contexts. One of the great advantages of DDD is that it does not require the use of any specific architecture. However, the software industry has been using a plethora of architecture styles over a period of the last several years. Let’s look at how DDD can be used in conjunction with a set of popular architecture styles to arrive at better solutions.

2.1.1. Layered Architecture

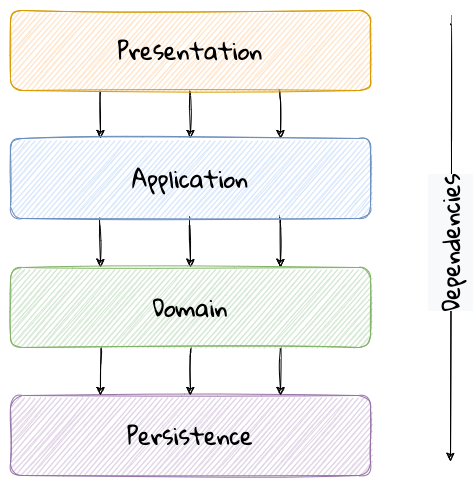

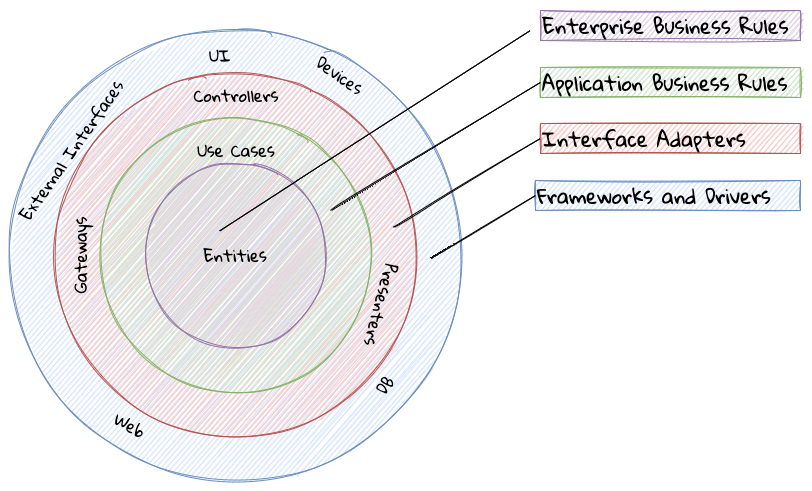

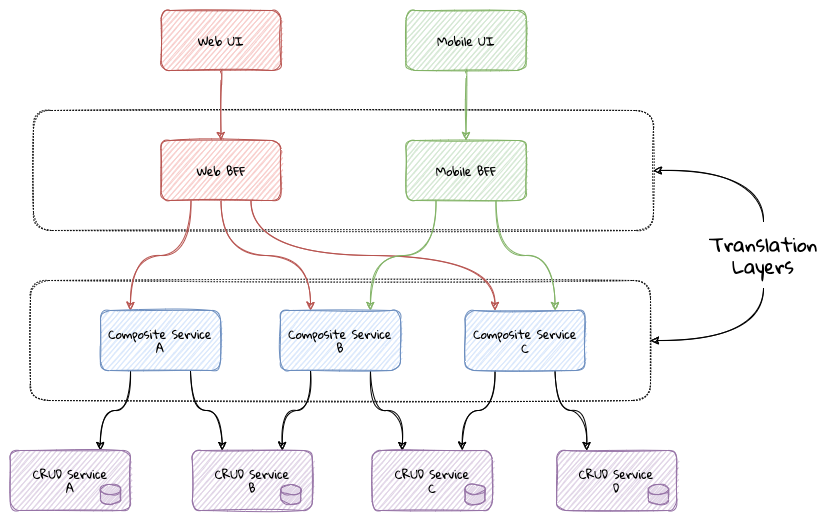

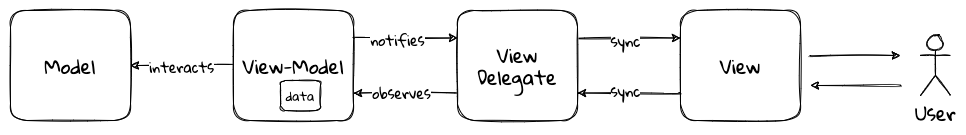

The layered architecture is one of the most common architecture styles where the solution is typically organized into four broad categories: presentation, application, domain and persistence. Each of the layers provides a solution to a particular concern it represents as shown here:

The main idea behind the layered architecture is a separation of concerns — where the dependencies between layers are unidirectional (from the top to the bottom). For example, the domain layer can depend on the persistence layer, not the other way round. In addition, any given layer typically accesses the layer immediately beneath it without bypassing layers in between. For example, the presentation layer may access the domain layer only through the application layer.

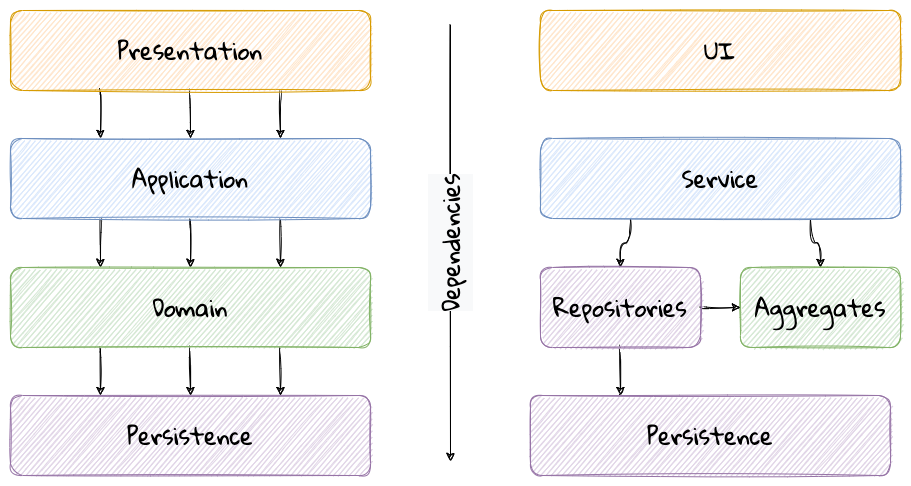

This structure enables looser coupling between layers and allows them to evolve independently of each other. The idea of the layered architecture fits very well with domain-driven design’s tactical design elements as depicted here:

DDD actively promotes the use of a layered architecture, primarily because it makes it possible to focus on the domain layer in isolation of other concerns like how to information gets displayed, how end-to-end flows are managed, how data is stored and retrieved, etc. From that perspective, solutions that apply DDD tend to naturally be layered as well.

Notable variations

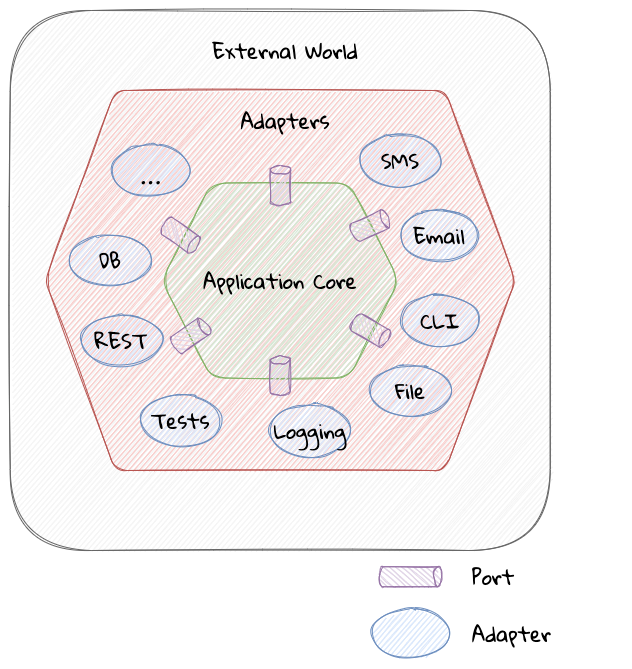

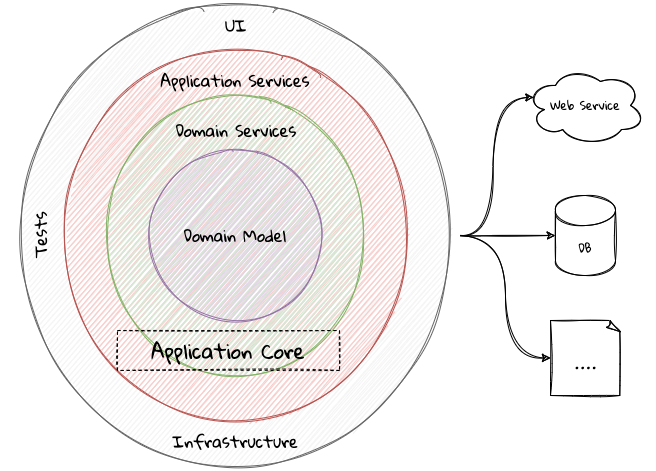

A variation of the layered architecture was invented by Alistair Cockburn, which he originally called the hexagonal architecture[5] (alternatively called the ports and adapters architecture). The idea behind this style was to avoid inadvertent dependencies between layers (as could occur in the layered architecture), specifically between the core of the system and the peripheral layers. The main idea here is to make use of interfaces (ports) exclusively within the core to enable modern drivers such as testing and looser coupling. This allows the core to be developed and evolved independently of the non-core parts and the external dependencies. Integration with real-world components such as a database, file systems, web services, etc. is achieved through concrete implementations of the ports termed as adapters. The use of interfaces within the core enables much easier testing of the core in isolation of the rest of the system using mocks and stubs. It is also common to use dependency injection frameworks to dynamically swap out implementations of these interfaces when working with the real system in an end-to-end environment. A visual representation of the hexagonal architecture is shown here: